video cards

If you are buying right now, buy a RadeonHD 5x00 series card.

If you are waiting until next March, that's about when Fermi-based cards will be entering the market... if everything goes right for Nvidia. As it stands right now though, the general hard-core gamer guess is that Fermi's architecture actually isn't going to be that great for gaming. Nvidia's own documentation on the chip avoids talking about gaming applications and talks more about computational (OpenCL, DirectCompute, GPGPU) uses.

If Nvidia's actually able to make a March launch, which some Original Design Manufacturers and Original Equipment Manufacturers are really doubtful of, they'll only be launching a month or two before the planned product refresh of the RadeonHD 5x00 series. The high-end video situation has some Nvidia investors wondering if the company will survive on its own, or if Nvidia is attempting to re-position itself to be bought up as a technology development company. As is, Nvidia has already publicly pulled out of some of its former strongholds, such as chipset development, and is focusing more on its integrated technologies such as its ION and Tegra platforms.

The other big problem is that if Nvidia actually does make a March launch with Fermi-based cards, they'll be competing with Intel's Project Larrabee. Now, we don't actually know much about the performance and price points Larrabee cards will be launching at. We do know from Epic Games, the guys behind Unreal Tournament, that the Intel cards will likely be targeted at the mid-range $100-$200 market segment, and that the silicon is capable of pushing UT3 at 1080p with the full detail levels.

***

As to your other question... no. The current graphics engine in City of Heroes doesn't actually leverage Crossfire or SLI graphics card setups.

However, we do know in an off-hand manner that the upcoming Ultra Mode does leverage multiple GPUs. The demonstration given at HeroCon was based on Crossfired RadeonHD 4870x2s.

That being said, if you read through the Going Rogue Information thread, it was reported that according to Ghost Falcon, Ultra Mode was capable of running on just a single RadeonHD 4850. The resolution it'd be running at wasn't specified, so it could be that the 4850 could only do it in 640x480. Doubtful... I'm guessing 1680x1050 is more likely given what the 4850 can manage in other games, and Crossfired 4850s can probably hit 1920x1200.

***

As to the final point... the GTX 295 does use 2 GPUs, and the cheapest one starts at just above $460 after a mail-in rebate.

AMD / ATi are lining up a direct competitor, a 5870x2 if you will. Images of it have already been released / leaked. One difference you might notice is that unlike the GTX 295, the 5870x2 is a single slot solution... which means if you really wanted to, you could make a quad-crossfire setup with it for 8 GPUs. You'd be broke... but hey... how cool would that be?

Pricing and availability are still unknown. Because the RadeonHD 5x00 cards are clearly the better choice to purchase right now, you'll actually have a hard time buying one. Newegg is completely sold out, although I did find a couple for sale through Pricewatch.

The 5870x2 is likely to be the same story. It's possible that vendors such as Dell's Alienware and HP's VoodooPC will be getting the majority of initial 5870x2 shipments, leaving few for the retail channel.

So, is crossfire/SLI talking about one card with two chips on it? Or is that talking about two video cards, no matter how many chips per card? Maybe I'm not understanding correctly what GPU means, specifically.

If I get a vid card that has two chips, will CoX use them? or will it only use one of the chips?

Does Pentium i7 950 work well with radeon cards?

I've had the impression, over some years, that Radeon cards suffer from teh suk when it comes to drivers. Complaints of messed up ones, and never any updates. Is that the way it is now, with the current high end cards? How do you think it will be for the ones you mentioned coming out soon?

Thanks for the info you shared so far.

__________________

whiny woman: "don't you know that hundreds of people are killed by guns every year?!"

"would it make ya feel better, little girl, if they was pushed outta windows?" -- Archie Bunker

|

So, is crossfire/SLI talking about one card with two chips on it? Or is that talking about two video cards, no matter how many chips per card? Maybe I'm not understanding correctly what GPU means, specifically.

|

***

- Graphics Card

A graphics card contains your physical GPU and all of the external controls and circuitry required for the GPU to work and make a picture.

***

- GPU

GPU stands for Graphics Processing Unit. This is the physical chip that does all of the graphics calculations.

Multi-GPU stands for Multiple Graphics Processing Units. It means you have more than one chip that calculates the graphics in your computer.

***

- SLI

A few years back Nvidia bought up 3DFX and began working on Multi-GPU technology again. They branded their new technology SLI, for Scalable Link Interface.

SLI is used as a branding term for any Multi-GPU technology produced by Nvidia. If you have more than one physical processor that calculates graphics, the technology required to manage each physical processor and make the processors work together is called SLI.

***

- Crossfire

Crossfire is AMD's / ATi's brand name for managing multiple GPUs. If you have more than one physical processor that calculates graphics, the technology required to manage each physical processor and make those processors work together is called Crossfire.

***

You'll also find terms like:

- Triple SLI - This means you have 3 Nvidia GPUs

- Quad SLI - This means you have 4 Nvidia GPUs

- Triple Crossfire - As you'd expect, this means you have 3 ATi GPUs

- Quad Crossfire - and finally, 4 ATi GPUs

***

Now, both current vendors, ATi and Nvidia, sell single graphics cards with 2 GPUs on each card. So it is possible to have a Quad-SLI or Quad-Crossfire setup with only two physical add-in graphics cards.
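To put numbers on that, here's a minimal sketch in Python. The function names (`total_gpus`, `multi_gpu_label`) are made up for illustration and aren't from any vendor tool; the code just multiplies cards by GPUs per card and applies the branding terms listed above.

```python
# Hypothetical helpers for illustration only; not part of any driver or vendor API.

BRAND = {"nvidia": "SLI", "ati": "Crossfire"}
PREFIX = {2: "", 3: "Triple ", 4: "Quad "}


def total_gpus(cards: int, gpus_per_card: int) -> int:
    """Number of physical GPUs in the system."""
    if cards < 1 or gpus_per_card < 1:
        raise ValueError("need at least one card with at least one GPU")
    return cards * gpus_per_card


def multi_gpu_label(vendor: str, gpu_count: int) -> str:
    """Marketing term for a given vendor and GPU count (2 to 4 GPUs)."""
    if gpu_count not in PREFIX:
        raise ValueError("this sketch only covers 2, 3, or 4 GPUs")
    return PREFIX[gpu_count] + BRAND[vendor.lower()]


# Two dual-GPU cards give 4 GPUs in total.
print(multi_gpu_label("Nvidia", total_gpus(cards=2, gpus_per_card=2)))  # Quad SLI
print(multi_gpu_label("ATi", total_gpus(cards=2, gpus_per_card=2)))     # Quad Crossfire
```

So two GTX 295s count as Quad SLI even though only two cards are plugged in.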

***

| If I get a vid card that has two chips, will CoX use them? or will it only use one of the chips? |

| Does Pentium i7 950 work well with radeon cards? |

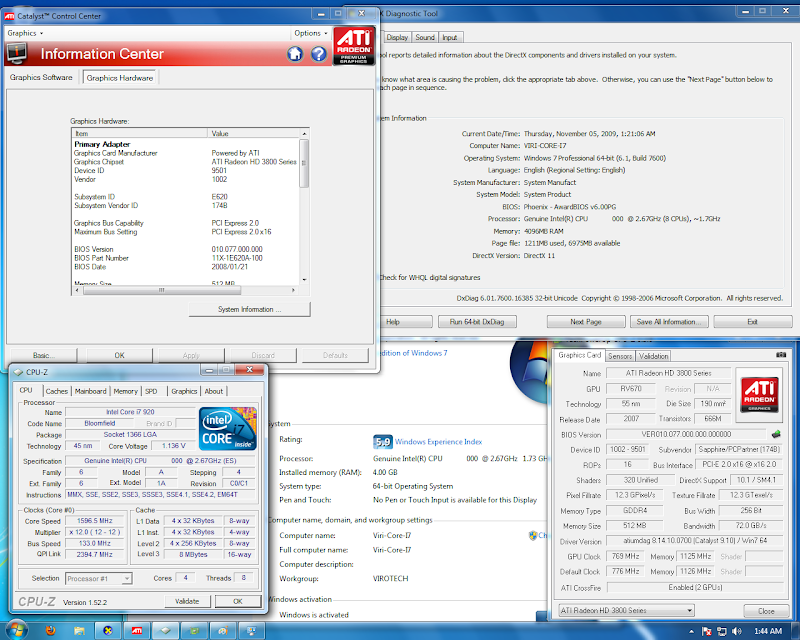

And yes, I can say that it does. I've got a Core I7... something, well, let me turn that computer on and take a screen shot. Be a minute.

And... here it is.

***

| I've had the impression, over some years, that Radeon cards suffer from teh suk when it comes to drivers. Complaints of messed up ones, and never any updates. Is that the way it is now, with the current high end cards? How do you think it will be for the ones you mentioned coming out soon? |

That ATi cards were messed up was true, however, with OpenGL until a couple of years ago. ATi retired the Radeon 8500 series cards on Nov. 15, 2006, and they did so because an OpenGL rewrite was in the cards. That rewrite hit in 2007 : http://www.phoronix.com/scan.php?pag...item=914&num=1

And... the results were spectacular. The OpenGL performance skyrocketed on many ATi cards, with some averaging over 100% performance increases. From a performance standpoint, ATi had indeed caught up with Nvidia on OpenGL support.

If you go back and look at the archived drivers here: http://support.amd.com/us/gpudownloa...eonaiw_xp.aspx :: and then compare them to Nvidia's drivers here: http://www.nvidia.com/Download/Find.aspx?lang=en-us :: You'll actually find that AMD / ATi has been far more regular with driver updates than Nvidia. AMD / ATi hasn't actually missed a monthly driver update for... Windows since... well. Going all the way back beyond the Catalyst 6 series from 2006. I want to say that ATi's regular driver updates started in 2004 / 2005.

You'll also find that Nvidia's been the one with broken drivers, as this article demonstrates when pitting Nvidia's 64bit drivers against ATi's 64bit drivers: http://www.mepisguides.com/Mepis-7/h...ati-64bit.html

***

Now, I've had lots of Nvidia cards and lots of ATi cards. Of the ones I've personally owned for myself, and used in my own systems:

Nvidia:

Riva TNT2

Geforce 2 MX (pick a variant, I've had them all)

Geforce 4200 TI Factory Overclocked (then overclocked to 4600 GPU speed, 4400 memory)

Geforce 6600 GT (2 of them, but AGP factory overclocked)

Geforce 7900 GT KO (factory overclocked)

Geforce 6800 Mobile

Geforce GTS 250 (Triple SLI)

ATI:

IBM Oasis (don't. ask. just don't.)

Rage 128 AIW

Radeon 7500

Radeon 8500 AIW

Radeon 9600 XT

Radeon 9600 Pro

Radeon 9800 Pro

Radeon 9800 AIW

Radeon 9800 XT

Radeon x1800 XT

Radeon x1900 AIW

RadeonHD 2600 Mobile

RadeonHD 3870 (Crossfire)

RadeonHD 4850 (Crossfire)

Now, you might notice that I've had a lot more ATi cards than Nvidia cards. That's because, in my own personal experience, the Nvidia cards and their drivers have invariably sucked like a Hoover vacuum cleaner.

The 6600 GTs, for example, howled like banshees. I actually wound up sacrificing 4 slots for each card in order to use a water-cooling solution. One of the big problems with the 6600 GT AGP edition cards is that they used a unique fan mounting design in order to make room for the PCIE to AGP bridge chip... and the only cooler I ever found that would work was a self-contained Thermaltake Tide-water cooler.

The 7900 GT KO, great card. Only, it wasn't really that much faster than the x1800 or x1900 cards I had, and required a heftier power-supply.

The GTS 250s... again. Great cards. They perform wonderfully in the system I've got them hooked up to... yet... when I was buying them... they were all dual-slot solutions. I couldn't find any vendors that sold a single slot GTS 250... Yet I had no problems finding vendors that sold single slot RadeonHD 3870s or single slot RadeonHD 4850s... which were in the same price range. This might not sound like a big deal, but the 3 GTS 250s I've got literally take up so much space, I've got no room to plug in the cables for my front USB ports or my front speaker ports, and there's no physical space to place a real sound card for the computer... and I'm using a full ATX board in a FoxConn chassis.

On the other hand, the ATi cards have been... well. I can't honestly say I've ever had any driver problems with them except in games where Nvidia had a hand in the development... say like Planetside... but that was buggy on Nvidia cards too, just a different kind of buggy.

***

So, looking ahead to the future... I seriously question Nvidia's commitment to the hard-core gamer. I really do question just how good, or bad, Fermi is going to be for gaming purposes. Honestly, I'd feel a lot more comfortable if Nvidia was able to demonstrate Fermi's architecture working in IKOS.

For those who don't know, IKOS is a brand name for a silicon pre-production system. Basically it's a hardware setup that allows developers to mimic the hardware structure of a chip before the silicon is actually fabbed. Most vendors use an IKOS box, or a similar system, to verify that an architecture works as expected before sending it off to be fabbed. Nvidia has, as far as I'm aware, yet to demonstrate that Fermi works even at the silicon emulation level.

***

By the same token, I'm somewhat... leery... of what AMD's going to be doing with their drivers. I've gotten some doublespeak recently from them, with Catalyst Maker (Terry) saying that yes, OpenGL support was still a priority for the dev team, and others saying that OpenGL support was really only going to be targeted at the workstation market... couple that with Matthew Tippett leaving for Palm, and I wonder if AMD will continue to push OpenGL performance... which is important to CoH, and, I feel, important to the games industry as an overall technology.

I'm not exactly comfortable with the idea of Intel being the main graphics vendor pushing OpenGL...

***

Okay, does that answer your questions about the subjects?

Just to add a tiny bit here...

Without going too much into which is better per se, I've never experienced nearly as many problems with my nVidia cards as I have/had with ATI. I'm just posting this to show that everyone is going to have different experiences.

On that note, je_saist that was very well put together information. Not just for the OP, but anyone else who might have had these questions. Thanks for putting in the effort!

We'll see....

OMG je_saist, thank you SO much for all of the great info!

Well, I think I'd better get plannin' on using some Radeon cards sometime soon. Wouldn't be fair to never try them after I've found out as much as I have on them.

If you have time for a quick answer, I guess my last question is: are the 5870s worth waiting for (any idea how long a wait we're talking about here?) or should I tell the computer builders to stick in four current high-end Radeon cards?

My current plans are to use a fairly-good vid card in the new compy to tide me over to the next big thing, but if the current high-end crossfire stuff is good enough. . . I guess I'm asking for an opinion.

Thanks, again, for all your shared info, I luv ya, man! (girl?)


|

If you are waiting until next March, that's about when Fermi-based cards will be entering the market... if everything goes right for Nvidia. As it stands right now though, the general hard-core gamer guess is that Fermi's architecture actually isn't going to be that great for gaming. Nvidia's own documentation on the chip avoids talking about gaming applications and talks more about computational (OpenCL, DirectCompute, GPGPU) uses.

|

| As is, Nvidia has already publicly pulled out of some of its former strongholds, such as chipset development, and is focusing more on its integrated technologies such as its ION and Tegra platforms. |

(I'm poking through these threads since I have a system build coming up. Haven't decided if I want to run AMD or jump to Intel - not least because of money, but partially because of Socket Madness that's traditional to Intel. I can throw the $150-$200 I'd save off of the Intel system towards a better video card, after all. Current system - AMD, 3+ years old, and just barely at the end of its supported CPU upgradeability.)

Intel doesn't want anyone to make chipsets for the Socket 1366 or 1156 series of CPUs other than Intel. I think this is also true about the laptop versions of those CPUs. This locks nVidia out of the "but my integrated graphics stomps your integrated graphics into last week" laptop and desktop chipset market but they can still sell discrete laptop GPUs.

This annoys nVidia big time. nVidia is also now forced to license the magic word to motherboard manufacturers that allows those motherboards to enable the SLi code in nVidia's drivers. nVidia makes considerably less money selling magic words than chips. But without SLi support, who is going to buy multiple high end nVidia cards?

The biggest "surprise, didn't see that coming" market for high end video cards is Wall Street, where firms are using them to build supercomputer trading systems that buy stock on news milliseconds before the competition and then sell to that competition seconds later for an instant profit.

Rumor is nVidia may have a video card with their G300 GPU to show before the end of this year. Nothing about when you will be able to buy one, but it sounds like the first batch of G300s is allocated for Wall Street and not die hard gamers. The new price point for high end video cards as set by ATI's HD 5870 is discouraging nVidia's manufacturers from building the top end cards because the formerly fat profit margin there is quite thin now. So it's been getting tougher to find GTX 275/285/295 cards from big name players other than eVGA. I'm guessing the reason is price, since eVGA really hasn't cut theirs.

ATI has hit a snag with their 5xxx series cards, two actually. First, they didn't plan on the cards being so popular, so they didn't order enough of the 40nm wafers the chips are made from. Second, the fabs producing them are having manufacturing problems at 40nm, so yields are horrible, making the shortage even worse. So it's tough if not impossible to find the HD 58xx series cards. The GPUs for the HD 57xx series don't seem to be in short supply but they are literally half of an HD 58xx GPU in size and complexity.

Basically it sucks now if you are looking at top shelf video cards.

It's also tough to find a mid to high end video card that isn't dual slot, explicitly (double wide bracket) or practically (single width bracket but huge bolted on heatsink interfering with the next slot). Problem is simple. The more powerful the GPU, the more power it uses and the more heat it produces. For an air cooled solution the more cooling fin area you have the better. Also more area generally means a less noisy/slower fan can provide cooling. Add this all together and poof, double wide video cards starting at $100-125.

Multiple GPU support is not automatic in games. Games aren't coded to know whether multiple GPUs are in the system, but they do need to be coded so the CPU has time to kill between the last command it issued to the GPU and the GPU finishing the frame. Why? Because if you have a second, third, etc. GPU in the system, the CPU can use that time to start issuing commands for the next frame to the next GPU in sequence. It also helps if you are pushing the GPU with crazy sick shaders, a large resolution (lots of pixels), as well as multisampled anti-aliasing and anisotropic filtering, to maximize the time it takes the GPU to finish the frame. The more the CPU waits on a single GPU system, the more a multi-GPU system will help framerate.

This is also why two lower level video cards show such a performance boost in multi-GPU setups, as do systems where the CPU is much, much faster than the GPU. Again, the longer the CPU needs to wait for the GPU to finish, the more multiple GPUs help.
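A rough way to picture this is the toy model below (Python). It assumes an idealized round-robin, one frame per GPU in turn, with no synchronization or driver overhead, so real scaling will be worse. The names `frame_time_ms` and `speedup`, and the example timings, are my own assumptions for illustration, not measurements from any game.

```python
# Toy model of round-robin (alternate frame) rendering. Assumptions, not measurements:
#   cpu_ms = time the CPU spends issuing commands for one frame
#   gpu_ms = time one GPU needs to finish rendering that frame


def frame_time_ms(cpu_ms: float, gpu_ms: float, gpus: int = 1) -> float:
    """Steady-state time between finished frames.

    The CPU can never hand out frames faster than one per cpu_ms, and the
    GPUs together can never finish them faster than one per gpu_ms / gpus.
    """
    if gpus < 1:
        raise ValueError("need at least one GPU")
    return max(cpu_ms, gpu_ms / gpus)


def speedup(cpu_ms: float, gpu_ms: float, gpus: int) -> float:
    """Framerate gain of a multi-GPU setup over a single GPU."""
    return frame_time_ms(cpu_ms, gpu_ms, 1) / frame_time_ms(cpu_ms, gpu_ms, gpus)


# CPU waits a long time on the GPU: the second GPU nearly doubles framerate.
print(speedup(cpu_ms=2.0, gpu_ms=20.0, gpus=2))

# CPU is busy almost the whole frame: the second GPU barely helps.
print(speedup(cpu_ms=18.0, gpu_ms=20.0, gpus=2))
```

In this model the gain is capped by whichever side is slower, which is the same point as above: the less the CPU waits on a single GPU, the less extra GPUs can add.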

This is why it's not an automatic "home run". It depends on the game. For our game I'm not sure if the GPU is simply not pushed hard or the engine is coded to command the GPU bits at a time leaving little time between the last command and the frame finishing.

However I'm going out on a limb here to say that full volumetric shadows are an expensive post process effect, along with more realistic reflections in buildings and water. I think it will be safe to say that those two effects are going to load a ton of work on to the GPU at the end of each frame. Because of this I'm guessing we will see a more obvious improvement in framerates on multi-GPU rigs in the Going Rogue expansion than we do today. How much? I can't say.

Father Xmas - Level 50 Ice/Ice Tanker - Victory

$725 and $1350 parts lists --- My guide to computer components

Tempus unum hominem manet

|

I'm wondering if that's not just because they want to be seen as *more than* a gaming company. The GPU's been turning into just "more processors" for a while now (Folding@Home, for instance, can use some of them for computations, as can some graphics apps - just off the top of my head.) So, really, pushing that would (to me) make a bit more sense than just "You can push 5000 FPS over 9 screens @192000x14500" as far as what they want to highlight.

I was under the impression that was a result over the stink between them and Intel over chip support, patents/licensing or some such. |

These two parts are actually related. Nvidia doesn't just want to be seen as something other than a gaming company... they have to be seen as something other than a gaming company.

The reality is, the stink over the Intel chipset licensing was a bit of a red herring. The short version is that Nvidia claimed that their pre-existing licensing for the system bus used on the Core platform also gave them license to use the new bus developed for the Nehalem architecture, which replaced the Front Side Bus. Intel said no, it didn't. Intel didn't actually explicitly tell Nvidia that they could not ever have a license for the Bus Logic system used in the Nehalem architecture. Intel just told Nvidia they couldn't use their pre-existing contracts.

What this meant is that in order to have chipsets for Core i7 processors Nvidia would have to come to a new contractual agreement with Intel... and Intel had set that price higher than Nvidia was willing to pay.

The other problem with Nvidia's lines about why they were leaving the chipset market is that they abandoned the AMD platform. Not even 2 years ago the best AMD motherboards were shipping with Nvidia chipsets. Then, when Socket AM3 hit, Nvidia announced several chipset solutions... then never delivered.

If Nvidia had been serious about Intel's licensing being the reason why they left the chipset market, they would have been pushing their chipsets for both AMD and Intel platforms at the same time. They weren't. They had already axed development of one branch, and evidently, were looking to axe the other in a convenient manner. Now, I can't fault Nvidia for leaving the chipset market. The other third parties like Via and SiS had been winding down chipset support for other processors for years, and Nvidia took Uli out of the market. I can, and do, fault Nvidia for not being straight-forward with their investors about why Nvidia left the chipset market.

***

Now the reason(s) behind these product moves are partially found in both Intel Larrabee and AMD Fusion. Part of the goal of the Larrabee project wasn't just to develop a competitive performance graphics solution based on the x86 architecture, it was also to merge an x86 based GPU onto an x86 based CPU. Both AMD and Intel will be launching Central Processors that include integrated graphics... on the same physical chip.

These new CPU/GPU hybrids will virtually eliminate many of the design issues OEMs have with current integrated graphics motherboards. The GPU will be able to take advantage of the processor's cooling, and the display hardware and memory access components will be greatly simplified. The design changes will free up wiring going to and from the Central Processor and System chipset.

In the Integrated graphics world as pictured by Intel and AMD, a third party graphics solution doesn't actually exist. There's no design space where a third party like Nvidia, SiS, or Via could plop in their own graphics solution. This means that the only place for a 3rd party graphics vendor, within just a couple of years, is going to be as an add-in card vendor.

From Nvidia's point of view this wouldn't quite be a bad thing since AMD / ATi is their only real competitor. Via's S3 Chrome series never gained any traction. PowerVR is only playing in the mobile segment if memory serves correctly, Matrox is only interested in TripleHead2Go, and 3DLabs hasn't been heard from since 2006.

However, with Intel entering the add-in card market, things don't look so rosy for Nvidia. One of the (many) reasons Intel's current graphics processors resemble an Electrolux is that an integrated GPU can only run at limited clockspeeds, and in Intel's case, they don't actually make a GPU, they only make graphics accelerators that still leverage the CPU for some operations. In an external card though, Intel can crank the clockspeeds and cores... and Intel's gotten really good at software rendering through x86. As a mid-range part, Larrabee based cards are a nightmare for Nvidia.

The fact is, the largest selling graphics segment... is mid-range and low-end cards. Nvidia and AMD make very little money off of their high-end cards. Most of their operating cash comes from the midrange sets, like the GeforceFX 5200, Radeon 9600, Geforce 6600, RadeonHD 3650, and so on.

With a potentially legitimate, and humongous, competitor coming up in what has traditionally been the bread-and-butter for most graphics vendors, the market Nvidia thought they could sell into was lessened. Nvidia also had other issues, such as a bad reputation with ODMs like Clevo and FoxConn, and OEMs such as Asus, Acer, HP, and Apple, over the issues with various NV4x, G7x, and G8x GPUs having (drastically) different thermal envelopes than Nvidia originally said those parts had. Just look up Exploding Nvidia Laptop.

***

As a company, Nvidia's market strategy needed to change. They needed to become something other than a graphics card vendor. They couldn't depend on Intel's GPU entry being a complete mongrel. Learning that Epic Games, the guys behind one of the engines that built Nvidia as a gaming company (Unreal Engine), was involved in helping Intel optimize Larrabee for gaming had to land like a blow to the solar plexus. While I'm not saying that Epic's involvement is magically going to make an x86 software rendering solution good... I think Intel is serious about getting Larrabee right as a graphics product... and when Intel gets serious about a product, they generally deliver. E.g. Banias into Core 2, and then the Core 2 architecture into i7.

As a company, I can't really figure out what Nvidia's ultimate strategy is going to be. A couple months ago I would have thought they were trying to get into the x86 processor market, and produce a competitor to both the Intel CPUs and AMD CPUs, essentially becoming yet another platform vendor. I think, emphasis on think, these plans have been scrubbed.

It's been more profitable for Nvidia to focus on offering multimedia platforms, such as the Tegra. If rumors are to be believed, Nvidia may have even landed the contract for the next Nintendo handheld platform... a potential slap in the face to rival AMD whose employees have been behind the N64 (SGI), GameCube (ArtX), and Wii (ATi) consoles, and who were at one point contracted to build a system platform for the successor to the GameBoy... a project that fell through when the DS started flying off shelves like it was powered by Red Bull wings.

It's the opinion of some analysts, and myself, that maybe not even Nvidia themselves know what direction they are going to take in the coming months. While it would be disappointing to see Nvidia leave the gaming card market, it is perhaps one of the options on their table.

| (I'm poking through these threads since I have a system build coming up. Haven't decided if I want to run AMD or jump to Intel - not least because of money, but partially because of Socket Madness that's traditional to Intel. I can throw the $150-$200 I'd save off of the Intel system towards a better video card, after all. Current system - AMD, 3+ years old, and just barely at the end of its supported CPU upgradeability.) |

On the other hand, I've got an Asus M2R32-MVP, which uses the Radeon Xpress 3200 chipset, which was a Socket AM2 motherboard from 2006... that's happily chugging away with a Phenom 9600.

|

If you are buying right now, buy a RadeonHD 5x00 series card.

If you are waiting until next March, that's about when Fermi-based cards will be entering the market... if everything goes right for Nvidia. As it stands right now though, the general hard-core gamer guess is that Fermi's architecture actually isn't going to be that great for gaming. Nvidia's own documentation on the chip avoids talking about gaming applications and talks more about computational (OpenCL, DirectCompute, GPGPU) uses. ~~~Snip~~~ |

Having a card that's able to support it right off the line might be a good idea.

|

Going Rogue Might be moving CoX to the OpenCL platform instead of the proprietary PhysX system.

Having a Card thats able to support it right off the line might be a good idea. |

How it's exposed is a bit odd though. Nvidia is currently exposing OpenCL support through CUDA, while both Intel's and AMD's drivers expose OpenCL directly.

Nvidia's upcoming drivers are expected to expose OpenCL directly as well.

***

edit: correction, I'm getting ahead of myself. ATi's driver is currently exposed through StreamSDK : http://www.phoronix.com/scan.php?pag...item&px=NzYwOA : and the driver hasn't actually been merged into Catalyst.

***

double edit. While I'm pretty sure support for OpenCL on Intel is included in Apple's Snow Leopard, I'm not able to find a Windows / Linux code release or mention... so I might be mis-reporting comments going through #intel-gfx

***

triple edit: ah, here's the Intel code reference I was thinking of : http://software.intel.com/en-us/foru...s/topic/68140/

| RapidMind currently targets CUDA, but I think a future version (maybe after we merge RapidMind with the Ct Technology project in Intel) should target OpenCL. I'd hope that would happen in 2010 - making that happen will depend on the maturity of OpenCL implementations we see appear from various companies. |

|

Going Rogue Might be moving CoX to the OpenCL platform instead of the proprietary PhysX system.

Having a Card thats able to support it right off the line might be a good idea. |

Problem is the game's physics engine would need to be replaced unless nVidia chose to release an OpenCL version of PhysX. Problem with that is that would cause nVidia to lose a unique marketing point that they have been beating the trades over the head with since they had very little else to talk about for months. Considering that nVidia's current crop of drivers disable PhysX support if an ATI card is also found in the system (for those who wished to use ATI for graphics but nVidia for PhysX) it doesn't seem they are ready to share PhysX support yet.

Of course this is moot since the API of PhysX the game uses, to the best of my knowledge, doesn't work with CUDA based PhysX either. Now if the devs state that they are swapping out PhysX for an OpenCL based physics engine (Bullet perhaps?), great. Just don't jump to the conclusion that OpenCL is coming here just because the GR demo was done on an ATI card, or because of reports that an NCSoft studio (it's a big company, lots of development studios) is looking at OpenCL for a different game.


Father Xmas: been pushing the developers for an official comment on what the stance of accelerated physics development is, if they intend to remain with PhysX or swap it out. So far... no official comment

*pulls out the 15ft poking stick and tries to get within range of a Wild Positron*

|

Like to know where you heard that. Wait found your previous posts on the subject.

|

If the game spit out 20 dollar bills people would complain that they weren't sequentially numbered. If they were sequentially numbered people would complain that they weren't random enough.

Black Pebble is my new hero.

I am having a new computer made soon, and I am considering my options for video cards in the immediate future, and in the near future after that.

What I'm wondering is, does running CoX benefit, or use the benefit, of multi-GPU video cards? I've heard that some games, if you had something like a GeForce 295, (I think that one has two chips in it?) some games only use one of them. Something about SLI? I'm not sure how to use that acronym.

Anyway, just wanting to make a good decision for my next vid card, and when Nvidia comes out with DX11 cards, I want to be able to pick a good vid card (or two?) at that time.

Thanks for any info y'all can share about how this game uses hardware like vid cards.
