
Quote: Sorry, got to disagree. It is a valid point. Things that seem easy and trivial to jaded vets can seem impossible for others. You're right, usually the spawns in the reactor are defeated in seconds and the team just stands around twiddling their thumbs, looking at the clock and waiting for the next one. But not every team out there can defeat them in seconds.

Case in point, I tried to help 2 new players who were asking about the respec trial. They had completely messed up builds, and knew it. I've done the respec trial dozens of times. I was completely confident going into it that no matter how bad their builds were, as long as they actively assisted me, we'd be fine. Nope. We failed on the last wave when the reactor was defeated. Those two players simply could not stay on their feet and I couldn't defeat the remaining enemies fast enough to protect the reactor.

The respec trial has to be balanced around the minimum required of players to start it, and with some assumption that those players might have less than effective builds.

Respec trial not enough of a challenge for you? Crank up the difficulty. Run it at +2. Or if you have less than a full team, run it at x8. Personally, I find most TFs pretty boring unless we're running at least +1.

Unfortunately Peacemoon, Panzer is right, and you are wrong.

Quote: First, the respec trial is tedious and boring. This has nothing to do with difficulty.

Um... yes it does. The trial seems tedious and boring because many players who run the trials don't have builds that are completely ruined, or they have influence to spend on enhancements and haven't slotted themselves up with enhancements that do pretty much nothing.

If you approach the trial from the perspective of a group of players with only one or two that have good builds, difficulty is a huge factor.

Quote: I would much rather a constant stream of enemies to fight even if they were -2 or -3;

And what happens if you have a group that can't defeat that steady stream? What happens if your group runs out of endurance and you need a moment to rest?

Quote: rather than a 3 minute gap between waves that leaves us literally wasting our time for 30 minutes.

Yay! You run with teams that can kill off all the mobs before the next spawn arrives! Yay you!

Good thing the developers don't balance the game for everybody who succeeds at events.

Quote: At least we would have something to keep us entertained.

Um... try actually talking to your team-mates.

I'm going to be brutal here with what I say next: Most of the players running respecs these days aren't doing the trials to correct bad builds.

Most players are running respecs to UNSLOT. They've packed in a couple procs or IO sets that they don't want to lose and want a respec to get that valuable IO back.

Yes, I realize that I am making a sweeping generalization here, and I normally detest when other players make similar sweeping generalizations without direct proof of the statement. I make this particular generalization based on the badge-channel traffic of 5 different US servers. Most respecs that I'm aware of, have participated in, or heard about, were basically started because the leader of the trial wanted to unslot.

Now, could I actually prove this statement numerically? Oh no. I don't have access to the figures of how many teams start the respec trial, how many teams fail the respec trial, nor what the average player does with a respec after a respec trial.

I can state that, from a strictly numerical standpoint, the respec trial hasn't changed from the I5 and I6 days. Many of the time sheets I made back then are still valid reference sheets for the trial today.

I, however, have changed. My tanks have a better handle on how much aggro they can hold. My blasters have a better handle on how far away from the fight they can be, and which inspirations are best for which situations. My defenders have a better handle on who to buff, when to buff, who to debuff, and when to debuff. My controllers have a better handle on controlling, my scrappers have a better handle on damage dealing. I have become a better player.

I've also become more intelligent with slotting. I already know roughly in advance which powers are going to be most effective with whatever available enhancement the power has.

All of this has a combined effect. The same task that I struggled to pull off literally years ago is now easy for me, not because anything changed on the game end, but because I changed on my end.

As Panzer points out, a player who doesn't have the play experience, the teaming-experience, or the meta-knowledge, will likely be in the same position I was years ago: wondering what the heck is going on and biting the dust.

* * *

My overall opinion then is that the Respec Trial itself does not need to be changed.

However.

That does not mean that there is not room for negotiation here. So here's my proposal: Implement a new Task Force for the reactor.

As a task force it won't carry the Trial rewards, so no respec reward. However, it will carry an increased merit reward.

For those who like the defend-the-objective gameplay that the Respec Trial teases: go ahead and set this new task force up to have a never-ending stream of enemies. As soon as one enemy is defeated, another spawns in.
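Purely as an illustration of the difference between the current timed waves and the proposed never-ending stream, here is a minimal Python sketch. It assumes a hypothetical spawner that keeps a fixed number of enemies alive; the names `EndlessSpawner`, `target`, `top_up`, and `on_defeat` are invented for this example and say nothing about how the game's engine actually works.

```python
from dataclasses import dataclass


@dataclass
class EndlessSpawner:
    """Hypothetical model only (not City of Heroes code): keeps `target`
    enemies alive by replacing each defeated enemy immediately."""
    target: int
    alive: int = 0
    spawned_total: int = 0

    def top_up(self) -> int:
        """Spawn enough enemies to get back to `target`; return how many spawned."""
        missing = max(0, self.target - self.alive)
        self.alive += missing
        self.spawned_total += missing
        return missing

    def on_defeat(self, count: int = 1) -> int:
        """Record `count` defeated enemies and replace them right away."""
        self.alive -= min(count, self.alive)
        return self.top_up()


spawner = EndlessSpawner(target=8)
spawner.top_up()      # opening group of 8
spawner.on_defeat(3)  # 3 defeated, 3 replacements spawn immediately
print(spawner.alive, spawner.spawned_total)  # 8 11
```

The design choice that matters is that respawns are driven by defeat events rather than by a clock, so the team's kill speed sets the pace. That is also why it would not suit the Respec Trial: a team that can't keep up never gets a breather.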

This approach of creating a new experience allows the developers to keep the Respec Trial where it is: a Trial that can be done even by players that have been leveled to 50 in AE.

It also allows the developers to "please" those who want an epic combat experience in the reactor. -

Quote: Shows how much I pay attention. I wonder what the "straw that broke the camel's back" was? I mean there were tons of crazy tags, and people were griping about neg rep attacks all the time. Did some one neg rep/tag one step too far?

We may, or may not ever know.

Dark One expressed a theory that the developers and publisher were looking ahead to Going Rogue and deciding to go ahead and bite some bullets "now" in preparation for new players dropping by the forums, some of whom might never have played an MMO before.

Ergo, it may not have been a straw breaking the camel's back... but more of a "we are going to have to do a cleanup at some point in time, might as well be now"

As to how people feel about it... well... the mods have already locked one "how do you feel about it" thread and have "politely" asked everybody to respond in the official response thread. That response thread can be found here: http://boards.cityofheroes.com/showthread.php?p=3064874 -


Quote: Just to add to je_saist's suggestions, a lot of people find it useful to uninstall the video card drivers, then boot into safe mode to run Driver Sweeper and manually delete anything that might have been left by Driver Sweeper while in safe mode. Usually just the ATI folder should be left, but occasionally you might find other remnants if you've been installing your drivers over top of one another instead of uninstalling the old ones and then installing the new ones.

As far as the suggestion of using 10.6, that would be good to see if your OpenGL drivers are stable, but I've found that the 10.7 ones have corrected the AA/AO bug that's been plaguing all of us ATI owners since the launch of i17.

AA+AO was fixed on the OpenGL 3.x cards (HD2xxx - HD4xxx) a couple driver revisions ago.

I just installed and tried 10.7, but it seems some OpenGL operations are borked. It also conked out for me on Unigine, I suspect due to the usage of tessellation by default now. -

Quote: But OpenGL would not run it would freeze my comp everytime i tried to start it

Okay then. Sounds like we are getting somewhere.

It's now "sounding" like your OpenGL driver is corrupted.

First: go here and download Driver Sweeper: http://www.guru3d.com/category/driversweeper/

Second: Go to your Control Panel, then Programs, then uninstall all ATi software.

The system will ask you to reboot. Don't.

Third: Run Driver Sweeper and select ATi.

Now reboot.

This should wipe out all of the installed ATi driver software.

Fourth: Reinstall the ATi drivers.

This should load in a fresh OpenGL driver.

Also: use the Catalyst 10.6 drivers. -
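If you want to double-check the sweep before reinstalling, here is a small read-only Python sketch that lists which ATi folders are still on disk. The folder paths in `DEFAULT_CANDIDATES` are assumptions for illustration (typical install and extraction locations), not a definitive list, and the script deletes nothing; anything it finds should be removed by hand, ideally from safe mode.

```python
from pathlib import Path

# Assumed leftover locations, for illustration only; adjust for your own install.
DEFAULT_CANDIDATES = (
    r"C:\ATI",                                   # assumed driver package extraction folder
    r"C:\Program Files\ATI Technologies",
    r"C:\Program Files (x86)\ATI Technologies",  # 64-bit Windows
)


def find_leftovers(candidates=DEFAULT_CANDIDATES):
    """Return the candidate folders that still exist. Read-only: deletes nothing."""
    return [path for path in map(Path, candidates) if path.is_dir()]


if __name__ == "__main__":
    leftovers = find_leftovers()
    if leftovers:
        print("Still on disk after the sweep (check these by hand):")
        for folder in leftovers:
            print("  ", folder)
    else:
        print("None of the checked ATi folders are present.")
```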

I think your picture is broke.

ppppppppppppppppbbbbbbbbbbbbbbbbbbbbbttttttthhhhhh -

... I honestly don't know what to tell you. Nothing seems odd in either report.

... I do think there is something I can have you do to try and uncover the problem.

Go here: http://unigine.com/download/

Download and run the Heaven Demo 2.1.

This demonstration allows you to choose either DirectX 11 or OpenGL as the API to run in. If you could, run the benchmark under both DirectX 11 and OpenGL, then post the results here. -


Quote: i actually just upgraded from 10.6 to 10.7 see if it would fix the problem but it was exactly the same

Okay. Seems like we have another problem on hand then.

Going down the checklist of items: is everything in your Catalyst Control Center set to Auto or "Let the application decide"?

Are you running any kind of anti-virus software?

Can you run both CoH-Helper and HijackThis?

http://home.roadrunner.com/~dasloth/CoH/CoHHelper.html

http://free.antivirus.com/hijackthis/ -

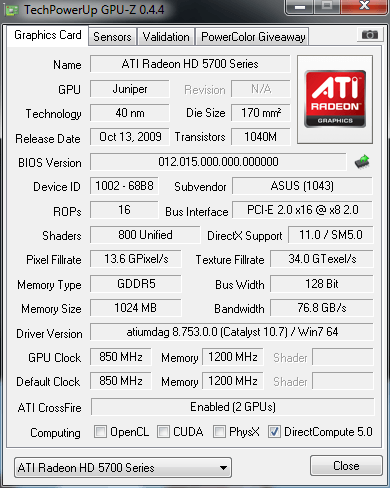

Quote: Is this what you need? http://www.techpowerup.com/gpuz/a2udw/

sorry if im doin this wrong im new to any kind of forums

According to that, you are already running Catalyst 10.7.

Could you drop back to Catalyst 10.6 and see if the problem replicates itself on the previous driver? -

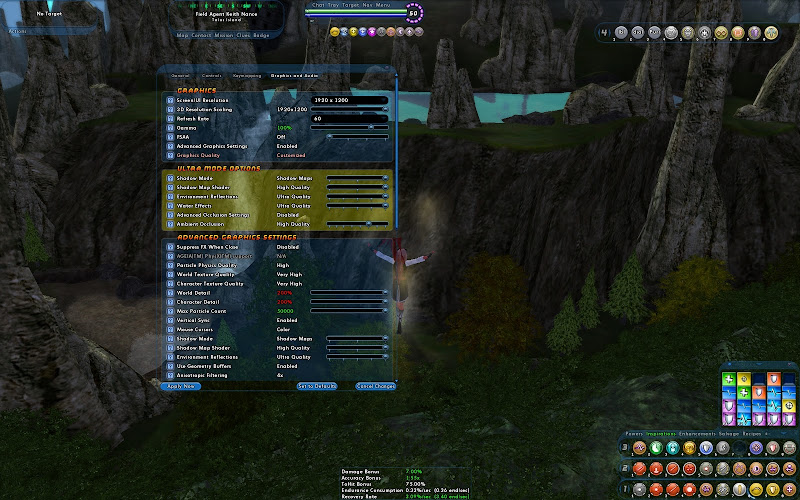

Quote: So i just subscribed to the game and i am having a few problems performance wise and wanted to know if this was normal, when i turn world detail to max 200% i only get 10-15 fps the same thing happens when i turn on ambient occlusion i tried them separate but no matter what i only get 10-15 fps, is there something i can do to fix that or is my computer not able to run them on high?

these are my system specs

Radeon HD 5870

4GB ddr3 ram

AMD Phenom II X4 965 3.40GHz

Windows 7 64-Bit

Given that a 5770 can drive 1920*1200 at ~25fps average against the same processor with the following settings:

It sounds like you've got another problem on hand.

Please post a GPUZ report: http://boards.cityofheroes.com/showthread.php?t=219502 -


Quote: If the only difference with USB3.0 is speed, then no, I'm not too bothered by it.

Nor SATA 6Gbps.

The only problems I'll have, if I get either and they aren't back compatible with USB 2.0 peripherals or "older" SATA drives.

So, either way is good.

I'm not bothered by SLi or Crossfire.

The standards groups for both SATA and USB try to operate on a backwards/forwards-compatible scheme. What this means is that they try to design things so that older devices work with newer controllers, and newer devices work with older controllers:

e.g.: if you have a SATA 1.5 drive, it will be read on a SATA 1.5, 3.0, or 6.0 controller. It will just run at the SATA 1.5 speed.

e.g.: if you have a USB 1.1 controller, it will be able to "access" a USB 3.0 device. The USB 3.0 device will just run at the USB 1.1 controller speed.
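The rule in both examples boils down to "the link runs at the speed of the slower side." A minimal Python sketch of that rule, using the nominal headline rates for each standard (real-world throughput is lower, and the actual negotiation is done by the hardware, not by anything like this function):

```python
# Nominal signalling rates in megabits per second (headline spec figures).
SATA_MBPS = {"SATA 1.5": 1500, "SATA 3.0": 3000, "SATA 6.0": 6000}
USB_MBPS = {"USB 1.1": 12, "USB 2.0": 480, "USB 3.0": 5000}


def link_speed(rates, controller, device):
    """Backwards/forwards compatibility: the link runs at the slower side's rate."""
    return min(rates[controller], rates[device])


print(link_speed(SATA_MBPS, "SATA 6.0", "SATA 1.5"))  # 1500: old drive, new controller
print(link_speed(USB_MBPS, "USB 1.1", "USB 3.0"))     # 12: new device, old controller
```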

Quote: je_saist. If you think that list are the only games that may have problems, but said problems. Are those problems you mentioned, big problems. I don't mind not having AA or Crossfire support, but how big a problem is the rendering thing you mentioned, and how prevalent is it amongst games?

Is it something that is actually noticeable most of the time?

Kind of a hard question to answer.

In some games, such as Batman Arkham Asylum, Need For Speed: Shift, and Borderlands, the Nvidia-caused problems are fairly noticeable, which is why I reference them the most.

Is that the list of games that have issues? Pretty much. Thing is, I'm not aware of any commercial game released under ATi / AMD's Get in the Game program that was deliberately sabotaged at a code level to run poorly on Nvidia GPUs. I've had no off-the-record or on-the-record comments from any game developers that ATi/AMD has told a developer to insert code to detect Nvidia GPUs and run in a lower graphics mode.

I am directly aware of several on-the-record comments by game developers who released games under Nvidia's The Way It's Meant to be Played program stating that Nvidia has withheld advertising money unless the developers met certain conditions, some of which have included the stunts I have outlined above. Some of these statements have been made to my face during various events at previous E3 conventions.

Now, is it a big enough problem to actually impact the playing of your game? Well, depends on the game.

I cannot flat-out tell you that it is a big problem, or that it is one that is absolutely noticeable.

Things like broken AA, or lower shader modes of operation (say forcing DX9 when a card supports DX10), are simple things to get away with since you might not ever notice a difference unless you are doing a side-by-side comparison.
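To make the difference concrete, here is a hypothetical Python sketch of two ways a game could pick its rendering path: by what the card reports it can do, or gated on the GPU's PCI vendor ID (0x10DE is Nvidia, 0x1002 is ATi/AMD). The function names and the DX9/DX10 path labels are invented for illustration; this is not code from any actual game.

```python
NVIDIA = 0x10DE   # PCI vendor ID
ATI_AMD = 0x1002  # PCI vendor ID


def pick_path_by_capability(max_dx_level):
    """Capability-based: use the best path the card says it supports."""
    return "DX10" if max_dx_level >= 10 else "DX9"


def pick_path_vendor_gated(max_dx_level, vendor_id, favored_vendor=NVIDIA):
    """Hypothetical vendor-gated selection: any other vendor is held to DX9,
    no matter what the card can actually do."""
    if vendor_id != favored_vendor:
        return "DX9"
    return pick_path_by_capability(max_dx_level)


# A DX10-capable Radeon:
print(pick_path_by_capability(10))          # DX10
print(pick_path_vendor_gated(10, ATI_AMD))  # DX9
```

Both versions run without errors on a DX10-capable Radeon; the gated one simply hands it the older path, which is why you would only spot it in a side-by-side comparison.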

Just look at the people here on these forums who have been running GeForce 7900s at high resolution with hot Windows titles, not realizing that they were running in lower shader modes. We had quite a few who went "but Crysis works fine on my 7900!" when they tried to run UltraMode in CoH and found themselves unable to leverage the new graphics mode. If you don't have a comparison point for what the image is supposed to look like, you probably won't notice.

There aren't very many commercially released games that make it to market with outright rendering sabotage intact. Publishers really dislike angry clients calling on the phone. The last one that I'm aware of was Borderlands, and Gearbox got around to correcting the game-code issue on Windows XP with Radeon cards. -

Quote: The version of Microsoft Paint (mspaint) in Windows 7 is actually fairly decent (although cropping still sucks).

If you want more, though, you might want to check out the Gimp (yeah, wonderful name, huh?) and Paint.Net. I've never used Paint.Net, but have heard wonderful things about it. I've used the Gimp a fair bit, and its UI is fairly similar to Photoshop (in the most primitive, crappiest sense of the word), but it's pretty feature packed for something free (and Free).

A little bit more on the GNU Image Manipulation Program. A couple years back one of the guys from Attack of the Show created a hack of the GIMP's UI to resemble Photoshop: http://www.gimpshop.com/

The hack is quite old now, having been based off of GIMP 2.1 and 2.2.

That hasn't been the only effort to make GIMP more usable to the average user. There's also been GimPhoto: http://www.gimphoto.com/ GimPhoto is also out of date, having been built against GIMP 2.4.

One of the reasons these hacks haven't really been updated is due to the GIMP developers trying to rework the interface. GIMP 2.6 was a huge step forward in terms of usability over 2.4.

That interface will change yet again in GIMP 2.8. Some of those changes can be tried now in the development branch, GIMP 2.7: http://www.gimp.org/release-notes/gimp-2.7.html

As far as I am aware, though, there is no binary build of GIMP 2.7 for Windows yet. Theoretically one should be able to compile the source code and get it to run... but whether or not it will run well is just a guess. -


eep! *ISH GOOSED*

*glares at Shecky*

You utter pillock... -

Quote: The site I use doesn't have your mobo that you suggest and I'm still unsure as to what graphics card I should get... I realize the ATi cards are better (and cheaper) at the moment, but I still worry about compatibility issues with games and the like... I like things to "just work" y'know

Well... the easiest way to figure this out is to go here: http://www.nzone.com/object/nzone_tw...gameslist.html

If a game is on that list, there's a small chance it will have broken Anti-Aliasing; broken Crossfire support; or will intentionally use a lower rendering path on ATi hardware than the rendering path the ATi hardware supports. Sometimes it will be all three.

That being said, I'm unaware of any games under Nvidia's program that won't work at all on ATi hardware. -

Quote: the game has a few movie theaters, why can the developers not use those to show off new updates and old updates + content from comic con

I think I know what you are getting at here, Ouch Grouch. If I understand this, you want to know why the developers do not use a system like Playstation Home, where trailers and movies are streamed live to the player.

The short version is: the engine doesn't work like that.

When the processing engine was built back in the early years of 2002 / 2003 there wasn't much thought on Redirected Rendering or Indirect rendering. Sony's Playstation Home leverages technologies derived from Indirect and Redirected rendering to stream and display movie trailers in real time.

From a development point of view, an in-game solution isn't as simple as setting up a player-visible viewport and directing a video feed to it. Keep in mind that when Half-Life 2 launched in 2004, the ability of the Source Engine to display viewports pointing to real-time renders of other events was considered quite an achievement. That was with a game designed for a single-player experience, not an MMO experience.

Just to go over a couple of quick issues, there's the matter of video encoding and decoding. What codec do the developers use? Well, the answer to anybody familiar with video codecs today is going to be: Ogg Theora or VP8. Both are royalty-free, open-source codecs, which means the Paragon Studios developers could implement the video codecs without facing the massive financial burden of licensing a competing codec, such as H.264.

Of the two codecs VP8 is considered to offer a higher video quality, and at least through FFMPEG is pretty fast even on "weak x86" such as Intel Atom. Of course, then there's the problem of actually exposing the video through the game.
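For a sense of what working with VP8 through FFmpeg looks like, here is a short Python sketch that builds (but does not run) an FFmpeg command to re-encode a trailer into WebM with VP8 video and Vorbis audio. `libvpx` and `libvorbis` are FFmpeg's encoder names for those codecs, but they are only available if your FFmpeg build includes them; the file names and the 2M bitrate are placeholders.

```python
import shlex


def vp8_webm_command(src, dst, video_bitrate="2M"):
    """Build (not run) an FFmpeg command that re-encodes src as VP8 + Vorbis in WebM."""
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "libvpx",       # FFmpeg's VP8 encoder (needs a build with libvpx)
        "-b:v", video_bitrate,  # target video bitrate
        "-c:a", "libvorbis",    # royalty-free audio to go with it
        dst,
    ]


cmd = vp8_webm_command("trailer.mov", "trailer.webm")  # placeholder file names
print(shlex.join(cmd))
# To actually run it: import subprocess; subprocess.run(cmd, check=True)
```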

Just a quick experiment here: fire up something like Big Buck Bunny in 1080p on your computer.

Now, launch City of Heroes.

Is CoH playable?

Depending on the hardware under your hood, your computer might be helpless unless you offload the decoding of the video to your graphics card. Of course, if you are off-loading the video to your graphics card... well... what exactly do you THINK will happen when you try to run a graphics intensive game on that graphics card?
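A back-of-the-envelope way to see the problem, assuming (crudely) that video decoding and game rendering share one GPU and their costs simply add up: every millisecond per second spent decoding video is a millisecond the game doesn't get. The per-frame costs in the usage lines below are made-up numbers for illustration, not measurements.

```python
VIDEO_FPS = 24.0  # typical film frame rate for a trailer


def game_fps_with_video(render_ms, decode_ms, video_fps=VIDEO_FPS):
    """Crude single-GPU model: decode and render costs add up, with no overlap.

    render_ms -- GPU time to draw one game frame (hypothetical input)
    decode_ms -- GPU time to decode one video frame (hypothetical input)
    """
    ms_spent_on_video = decode_ms * video_fps  # per second of wall-clock time
    ms_left_for_game = max(0.0, 1000.0 - ms_spent_on_video)
    return ms_left_for_game / render_ms


print(game_fps_with_video(render_ms=25.0, decode_ms=0.0))   # 40.0 (no video playing)
print(game_fps_with_video(render_ms=25.0, decode_ms=10.0))  # 30.4 (made-up decode cost)
```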

One of the reasons why Playstation Home can get away with streaming videos in real-time is that the Home developers only have to worry about one hardware specification. They only have to worry about one performance profile. They know, for a fact, what your system will perform like. Sony doesn't have to account for somebody that might only have a 3-SPE Cell processor or a Cell Processor at 1.2ghz.

The Paragon City Developers have to account for the players who are still running Radeon 8500's, Geforce 4's, and Intel Graphics Accelerators. Attempting to stream a movie through the game would likely wreck most of these low-end computers.

Of course, the counter to this is: well, only players who have the hardware can enter the theater, so player beware.
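The gate itself would be the easy part. A hypothetical Python sketch of a client-side capability check; `ClientCaps`, `may_enter_theater`, and the 512 MB threshold are all invented for illustration and are not anything the game actually has:

```python
from dataclasses import dataclass


@dataclass
class ClientCaps:
    """Hypothetical capability report from a player's client."""
    hw_video_decode: bool  # can the GPU decode the trailer's codec in hardware?
    gpu_memory_mb: int


MIN_GPU_MEMORY_MB = 512  # invented threshold, for illustration only


def may_enter_theater(caps: ClientCaps) -> bool:
    """Let a player into the theater only if their hardware can play the stream."""
    return caps.hw_video_decode and caps.gpu_memory_mb >= MIN_GPU_MEMORY_MB


players = {
    "newer card": ClientCaps(hw_video_decode=True, gpu_memory_mb=1024),
    "older card": ClientCaps(hw_video_decode=False, gpu_memory_mb=64),
}
for name, caps in players.items():
    print(name, "->", "allowed in" if may_enter_theater(caps) else "turned away")
```

The code is trivial; the hard part is the conversation with the players who get turned away.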

Put yourself in the developers' shoes. Do you really want to tell some of your player-base that you just implemented this cool feature in game... but they can't have it because their hardware is too old, or their performance is too low?

Speaking for myself, I just saw what happened when updates to the graphics engine under Going Rogue knocked the living daylights out of Intel Graphics Accelerator support. I think such an open and public move against lower-end hardware would make the Intel Firestorm seem like the Care-Bears Family Reunion.

Hopefully this gives you an insight into why City of Heroes doesn't have a function to stream video through the game, and why such support likely won't ever be implemented in the current game. -

Quote: Is there a sub 300 card that would be better than the 2 you suggested or the extra dough wouldn't deliver the performance to warrant it?

I... think you asked if a card in the $200-$300 price bracket would be worth the cost...

Well. On the Nvidia side. No. Your 600-watt power supply isn't going to be enough to drive the GTX 470. I had one through here a couple weeks ago and I pretty much had to use one of my 850-watt power supplies to drive it at full clocks. The average street price on a 470 is also still over $300, although manufacturers are now starting to offer mail-in rebates. You could pick up a good EVGA model for around $305 if you shop carefully.

On the ATi side... yes.

You can pick up a RadeonHD 5850 with no tricks, no mail-in-rebates, for under $300. What's more, you can find a good brand, such as XFX for these prices.

Now, I'd recommend the ATi card for several reasons, not the least of which is OpenGL support. According to posts on Nvidia's own Nzone forums, Nvidia has been pulling back on OpenGL support. Since there have already been a couple of long, drawn-out beat-downs on this subject, here's the short version:

Microsoft's proprietary graphics API is known as DirectX. DirectX 10 was developed on Windows NT5 (Windows 2000/XP), but only released on Windows NT6 (Windows Vista and Windows 7). This means the last version of DirectX that Windows NT5 supports is DirectX 9.

The industry-standard graphics API is OpenGL, which is overseen by an organization called Khronos. Various versions of OpenGL can be roughly matched to various versions of DirectX:

- DirectX 9 ~ OpenGL 2.0 ES

- DirectX 10 ~ OpenGL 3.x

- DirectX 11 ~ OpenGL 4.x

If you are using Windows XP you can STILL take advantage of whatever newer OpenGL version your hardware and driver support.

What this means is that games using the OpenGL API will give you the same visual image on Windows 7 as they give you on Windows XP on the same hardware.

Games using the DirectX API can give you a different visual image on Windows 7 than they give you on Windows XP with the same hardware, since on XP they are limited to DirectX 9.
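A simplified Python model of the XP versus Windows 7 difference described above. It assumes the graphics driver exposes the same OpenGL version on both operating systems, which is the premise of the argument, and treats the OS's DirectX ceiling as the only other variable; the function names are invented for this sketch.

```python
# Simplified model: the highest DirectX version each OS ships with.
OS_DIRECTX_CEILING = {"Windows XP": 9, "Windows 7": 11}


def directx_level(os_name, card_dx_level):
    """DirectX features are capped by the OS as well as by the card."""
    return min(card_dx_level, OS_DIRECTX_CEILING[os_name])


def opengl_level(os_name, driver_gl_version):
    """OpenGL features come from the driver/card; os_name is accepted only to
    mirror directx_level and does not change the result (assumption above)."""
    return driver_gl_version


for os_name in ("Windows XP", "Windows 7"):
    # A DirectX 11 / OpenGL 4.x class card on each OS.
    print(os_name, "DirectX", directx_level(os_name, 11), "OpenGL", opengl_level(os_name, "4.x"))
```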

So, Nvidia pulling back from OpenGL is, well, discouraging. Basically a flip-flop of the past... well... years really.

Then there's the power issue. A 5850 will run at full clocks on a 600-watt power supply, so you'll get the full performance of the card.

Then there's also the heat issue. Nvidia actually has a Fermi-Certified Case program, which is kind of required as both the GTX 470 and 480 have been recorded topping 200 degrees Fahrenheit. You need some serious cooling to run a 470 or 480.
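For those who think in Celsius, the 200 degree Fahrenheit figure converts as:

```latex
T_{\mathrm{C}} = \frac{5}{9}\,\bigl(T_{\mathrm{F}} - 32\bigr)
             = \frac{5}{9}\,(200 - 32)
             = \frac{5 \times 168}{9}
             \approx 93.3\,^{\circ}\mathrm{C}
```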

The 5850? Thermal-wise it's not that far off the pace of a 4870. In most cases it'll put-off less heat than a 4870 if you grab one of the ducted fan cards.

Is either a really big jump over the GTX 460 or RadeonHD 5830?

Honestly, if you've been running an 8600 in the games you mentioned with all details enabled, my guess is that you've got a screen resolution of 1440*900 or lower.

At these resolutions... no.

You'd have to be pushing 1920*1200 or higher before the 5850 separates itself from the 5830. -
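Some rough arithmetic on why the gap only shows up at higher resolutions: 1920*1200 has about 1.78 times as many pixels per frame as 1440*900, so the card has roughly that much more pixel work per frame. Pixel count is only a rough proxy for GPU load, but it is the main thing that changes when you raise the resolution.

```python
def pixel_count(width, height):
    """Pixels the card has to draw for one frame at a given resolution."""
    return width * height


low = pixel_count(1440, 900)    # 1,296,000 pixels
high = pixel_count(1920, 1200)  # 2,304,000 pixels
print(f"{high / low:.2f}x the pixels per frame")  # 1.78x the pixels per frame
```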

There's lots of stuff in the sub $200 market that will outclass an 8600. There's actually stuff in the sub $100 market that will outclass an 8600 now as both the RadeonHD 4850 and Geforce GTS 250 can be had for around the $100 mark.

If you have $200 to spend your choices are pretty much the RadeonHD 5830 or the Nvidia GTX 460.

Unless you have overriding motives, such as Linux support, corporate ethics, or... well... just look up Nvidia + my username in advanced search to get an idea... either card will pretty much rock anything you can throw at them. -
