CoH on Multi-GPU systems - benchmarks

Thanks for testing and posting this.

It confirms something that many of the old-timers have been saying for a while, but it's good to have visual proof for the newer players.

I agree with you regarding GR and the possible performance impact should Ultra Mode be heavily shader-dependent, and I strongly suspect that this will in fact be the case.

All of the features that have been showcased thus far seem to point in that direction, which is a good thing IMO.

|

Buying Nvidia for PhysX support won't do you a lot of good. You'll actually need a PhysX PPU card, and they are no longer sold new. They are not that expensive used though.

|

Have I mentioned I think this is a counterproductive business strategy they are following?

Generally, SLi and Crossfire will show an advantage when the CPU is waiting on the GPU to finish the current frame before it can start another. The longer the wait, the greater the impact. If a single GPU is fast enough so the CPU wait is short enough, there should be little if any difference in performance.
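A rough way to picture this is a toy frame-time model. It is only a sketch under stated assumptions: the function name, the millisecond figures, and the idea that a second card divides GPU time evenly with no overhead are all illustrative, not measurements from this thread.

```python
def estimated_fps(cpu_ms: float, gpu_ms: float, gpu_count: int = 1) -> float:
    """Toy model: the slower of CPU and (ideally scaled) GPU sets the frame rate.

    Assumes perfect multi-GPU scaling with no driver or sync overhead, which
    real SLI/Crossfire setups never quite reach.
    """
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    effective_gpu_ms = gpu_ms / gpu_count
    frame_ms = max(cpu_ms, effective_gpu_ms)
    return 1000.0 / frame_ms


# GPU-bound case (hypothetical numbers): a second card helps.
print(estimated_fps(cpu_ms=5.0, gpu_ms=20.0, gpu_count=1))  # 50.0
print(estimated_fps(cpu_ms=5.0, gpu_ms=20.0, gpu_count=2))  # 100.0

# CPU-bound case (hypothetical numbers): a second card changes nothing.
print(estimated_fps(cpu_ms=20.0, gpu_ms=10.0, gpu_count=1))  # 50.0
print(estimated_fps(cpu_ms=20.0, gpu_ms=10.0, gpu_count=2))  # 50.0
```

In the second case the GPU already finishes before the CPU has the next frame ready, so extra cards have nothing to speed up.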

There wasn't a lot of difference in the XP/Phenom 9600/HD4850 and a bit of difference in the Vista/Phenom 9600/HD4850 (around sample 13 through 18). An improvement in that range also showed up in the Win 7/i7-920/HD3870 graphs and the Vista/A64-6000/GTS250 graphs.

Whatever happens during that part of your test really strains the GPU. I don't see anything in your YouTube clip that looked out of the ordinary, perhaps just a lot of objects in the view coupled with a worst-case arrangement of those objects during that segment.

Father Xmas - Level 50 Ice/Ice Tanker - Victory

$725 and $1350 parts lists --- My guide to computer components

Tempus unum hominem manet

Yay! Thank you!

no physX? =(

I hope they get that going soon...

|

Generally, SLi and Crossfire will show an advantage when the CPU is waiting on the GPU to finish the current frame before it can start another. The longer the wait, the greater the impact. If a single GPU is fast enough so the CPU wait is short enough, there should be little if any difference in performance.

|

That's... not quite how the X.org driver developers have explained multi-GPU support in the past. It's also not quite right for how at least two of the known Crossfire / SLI modes work.

It sounds about right for Alternate Frame Rendering, where each GPU renders one whole frame at a time.

Referencing NeoSeeker for the names: http://www.neoseeker.com/Articles/Ha...iretech/2.html

There's also Supertiling, which, unless something has changed, wasn't working with OpenGL... so it wouldn't work with City of Heroes, and Scissor Frame Rendering, where the display is divided so that the GPUs share an equivalent processing load.
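To make the difference between the three modes concrete, here is a minimal sketch of which GPU would get which piece of work. The function names are made up for illustration, the equal-height scissor bands are a simplification, and the 32-pixel tile size is only an assumed default; none of this is taken from an actual driver.

```python
def afr_gpu(frame_index: int, gpu_count: int) -> int:
    """Alternate Frame Rendering: whole frames rotate between GPUs."""
    return frame_index % gpu_count


def scissor_gpu(row: int, screen_height: int, gpu_count: int) -> int:
    """Scissor: the screen is cut into horizontal bands, one per GPU.

    Simplification: bands are equal height here; a real driver may move
    the split line to balance the load.
    """
    band_height = -(-screen_height // gpu_count)  # ceiling division
    return row // band_height


def supertile_gpu(x: int, y: int, gpu_count: int, tile_size: int = 32) -> int:
    """Supertiling: the screen is a checkerboard of tiles dealt out to GPUs."""
    return (x // tile_size + y // tile_size) % gpu_count


# Two GPUs on a 1200-row screen:
print([afr_gpu(frame, 2) for frame in range(4)])             # [0, 1, 0, 1]
print(scissor_gpu(599, 1200, 2), scissor_gpu(600, 1200, 2))  # 0 1
print(supertile_gpu(0, 0, 2), supertile_gpu(32, 0, 2))       # 0 1
```

Only AFR hands out entire frames; the other two split a single frame, so every GPU works on every frame.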

Given the benchmark results I observed, it's safe to say that City of Heroes isn't leveraging Supertiling or Scissor Frame Rendering, which should have given some kind of boost to the frame rate.

I recall Anand doing a test on PhysX and CoH. He found that while adding a PhysX card can improve performance about as much as going from a single-core to a dual-core, this was only true on the Very High setting, which was indistinguishable from the much less demanding High setting.

In short, it ain't worth worrying about.

|

Given the benchmark results I observed, it's safe to say that City of Heroes isn't leveraging Supertiling or Scissor Frame Rendering, which should have given some kind of boost to the frame rate.

|

Both companies tend towards alternate frame rendering these days.

Sadly, if an OpenGL game doesn't have a Catalyst AI profile, it's impossible to use AFR on it; instead, it will default to scissor. If it's Direct3D, you can force what you like. At least, that was true a year ago; I haven't kept up on Catalyst development. What I do know is that SLI and CrossFire seem very well matched, with both working the best on an i7 platform.

EDIT: I has it! http://www.anandtech.com/video/showdoc.aspx?i=2828

Necrobond - 50 BS/Inv Scrapper made in I1

Rickar - 50 Bots/FF Mastermind

Anti-Muon - 42 Warshade

Ivory Sicarius - 45 Crab Spider

Aber ja, natürlich Hans nass ist, er steht unter einem Wasserfall.

Yes, I'm thinking AFR which is what I believe the nVidia SLi profile is for this game. No idea what if any profile ATI uses.


|

***

So, a couple things to take away from even this short test. Multiple GPUs don't do a whole lot of good in City of Heroes right now. High-end GPUs don't do a whole lot of good either. They simply can't put their power down in what looks to be a texture-reliant engine. |

1. Thank you for posting this excellent information.

2. I just bought a used PC from a friend at work:

Model: GM5474 with an AMD Athlon™64 X2 6000+, 64-bit dual core processor

Link: http://reviews.cnet.com/desktops/gat...-32574845.html

I'm running Vista 32 and 2GB of ram.

3. I just want the game to run excellent on this machine. I was considering upgrading the following:

A. Power supply

B. Nvidia card

C. Adding an additional 2GB of Ram ( I have 4 slots!!!)

4. Your post leads me to think upgrading will have no effect, can I get your opinion?

|

So, a couple things to take away from even this short test.

Multiple GPUs don't do a whole lot of good in City of Heroes right now. High-end GPUs don't do a whole lot of good either. They simply can't put their power down in what looks to be a texture-reliant engine. |

Here are the specs for the card...

Link: http://reviews.cnet.com/networking-a...=mncolBtm;rnav

|

I think this is overkill, but how about a Killer NIC card for eliminating rubber banding... or is the bottleneck server side?

Here are the specs for the card... Link: http://reviews.cnet.com/networking-a...=mncolBtm;rnav |

CoH can be played on a 56k modem, so if you've got lag, it's generally due to one of two reasons.

A: Slow connection between the network connection you are on, and the connection that the CoH server is on. This is the typical rubber-banding lag.

B: Server Processing Lag. This is the lag you get in Hamidon Raids, Ship Raids, or in the ITF's 3rd mission, where the server just can't process and return all of the data to all of the teammates in time.

One of the other problems is that your own personal network controller is already several times faster than an average cable or DSL connection.

Case in point can be found here : http://test.lvcm.com/

Cox Communications' 4 Mbps download speed is far short of 10BASE-T Ethernet's 10 Mbps. Up from 10BASE-T you have 100BASE-TX, then Gigabit, which runs at 1,000 Mbps. So, for all practical purposes, a Gigabit Ethernet card is already a couple of orders of magnitude (250 times) faster than that residential cable connection, and still faster than if you were leasing a T3 connection (about 45 Mbps).

Stuff like the Killer NIC, then, doesn't really do a whole lot of good...
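The gap between the local network card and the internet connection is easy to put in numbers. A minimal sketch: the 4 Mbps cable figure is the one quoted above, the Ethernet rates are the nominal ones, and the T3 rate of 44.736 Mbps is the standard figure, which I am assuming here since the post does not give it.

```python
# Nominal link speeds in megabits per second.
LINK_SPEEDS_MBPS = {
    "Cable (quoted above)": 4.0,
    "10BASE-T": 10.0,
    "T3 (assumed standard rate)": 44.736,
    "100BASE-TX": 100.0,
    "Gigabit Ethernet": 1000.0,
}


def speed_ratio(faster_mbps: float, slower_mbps: float) -> float:
    """How many times faster one link is than another."""
    return faster_mbps / slower_mbps


cable = LINK_SPEEDS_MBPS["Cable (quoted above)"]
for name, mbps in LINK_SPEEDS_MBPS.items():
    print(f"{name}: {speed_ratio(mbps, cable):.1f}x the cable connection")
```

Whatever the exact figures on a given line, the slowest hop sets the pace, and on a home connection that hop is not the network card.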

|

1. Thank you for posting this excellent information.

2. I just bought a used PC from a friend at work:

Model: GM5474 with an AMD Athlon™64 X2 6000+, 64-bit dual core processor

Link: http://reviews.cnet.com/desktops/gat...-32574845.html

I'm running Vista 32 and 2GB of ram.

3. I just want the game to run excellent on this machine. I was considering upgrading the following:

A. Power supply

B. Nvidia card

C. Adding an additional 2GB of Ram ( I have 4 slots!!!)

4. Your post leads me to think upgrading will have no effect, can I get your opinion? |

So, no, adding more RAM to the system you bought won't do any good unless you swap to a 64-bit base OS.

***

Now, to answer your question on upgrading your graphics card. As I've posted on other parts of this forum (and I'm too lazy to go find it), my opinion of Nvidia's 64bit drivers is somewhere along the lines of my opinion on XGI's Volari Drivers, and the ATi drivers pre-ArtX. *this opinion has been censored by the Vulgarity review board*

Short version is, I've tested across Geforce 6600 GT, 7900 GT KO (Factory Overclock), 9500 GT (+ 2x SLI), and GTS 250 (+ 2x SLI and 3x SLI), across NT6 versions Vista SP1, Vista SP2, Win7, and Linux kernels 2.6.25, 2.6.27, and 2.6.32. In all cases in native games running against OpenGL and DirectX on both platforms, the Nvidia drivers have consistently given texture corruptions, missing polygons, and other broken texture features. The problems have actually been in effect, well, for years.

On the more recent NT6 builds, Nvidia's 64bit drivers have consistently given me lock-ups on titles such as Half-Life 2, Ghostbusters, and Call of Duty 4: Modern Warfare.

These problems, so far, haven't manifested in Nvidia's 32bit builds. Which is why I personally leave my Nvidia systems running on 32bit OS's for production and review purposes.

***

This doesn't mean that upgrading your graphics card won't help, or be an improvement. If you stick to a 32bit base Operating System, Nvidia can deliver a stable and pleasurable experience. You'll just be stuck with the 2gb RAM limit of a 32bit OS.

***

Power supply: Upgrading your power supply may or may not help, depending on what you are trying to do. If you are grabbing a hefty card like a GTX 260 or better from Nvidia, or a RadeonHD 4850 or better from ATi, yes, I'd look at a better power supply.

***

edit: oh yeah. another Nvidia moment. Got told that I was using Outdated drivers in a crash... on the 32bit 195.62 drivers. Yeah. I don't think so.

I got asked in a PM about PAE, and about handing 32-bit systems more memory even if an application can't use it.

Here was my response:

|

Windows Server supports PAE, which allows the 32bit OS to access 4gb or more of memory: http://www.microsoft.com/whdc/system...AE/PAEdrv.mspx

Windows Xp also supported PAE, but you had to enable it in the boot.ini: http://www.microsoft.com/whdc/system...AE/PAEmem.mspx

Even then, processes are still limited to 2gb, and the OS will only read 3gb. Theoretically, Intel's triple channel setup would make this the fastest 32bit memory configuration, but in actual practice, it doesn't on Windows.

If you hand Windows 7 4gigs of Ram, or even 3gigs of ram, it will only use 2.5gb total. Same with Vista 32bit. It won't recognize more than 2.5gb even if you hand it more. I've got a couple of .VDI images I can demonstrate this on, which it sounds like I should. |
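The address-space arithmetic behind those limits can be sketched in a few lines. This only shows the theoretical ceilings; how much RAM a particular 32-bit Windows install actually reports is a separate question that this sketch does not try to answer. The helper name is made up for illustration.

```python
GIB = 2 ** 30  # bytes in one gibibyte


def addressable_gib(address_bits: int) -> float:
    """Memory reachable with the given number of address bits, in GiB."""
    return (2 ** address_bits) / GIB


print(addressable_gib(32))  # 4.0: flat 32-bit addressing
print(addressable_gib(36))  # 64.0: 36-bit physical addresses under PAE

# Default 32-bit Windows split: half of each process's 4 GiB virtual
# address space is user space, the other half is reserved for the kernel.
user_space_gib = addressable_gib(32) / 2
print(user_space_gib)  # 2.0
```

PAE widens the physical addresses the OS can use, but each 32-bit process still sees only its own 4 GiB virtual space, which is why a 32-bit game gains nothing from the extra RAM.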

This is derived from Posi's thread about which graphics cards would be able to run Ultra Mode and this post by PumBumbler.

Now, I do need to stress that this is by no means an exhaustive, in-depth study of how Windows systems with multiple GPUs run City of Heroes. I only went to one zone, and to keep things simple, used the free version of Fraps to capture screenshots and record the benchmark numbers.

That being said, I went to Cap Au Diable, and some of you might already know just from that where I went in the zone. To give the benchmark some context for what I did to create the numbers, here's a YouTube video of the ninja-run across rooftops in one of the most lagtastic parts of City of Heroes I have ever come across.

http://www.youtube.com/watch?v=zQZmgXtccUc

As a note, no, I didn't use the Demorecord function to create the benchmarks. Each of the runs was done manually, so there is some variation in each run. The resolution of the game was set to 1920x1200 with all details turned up.
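Because each run was done by hand, it helps to compare average frame rates against the run-to-run variation before calling a difference real. A minimal sketch, assuming the per-sample FPS readings have been copied out of the benchmark log into plain lists; the function names and the noise threshold are hypothetical and not part of Fraps or the game.

```python
from statistics import mean


def percent_change(baseline_fps: list[float], test_fps: list[float]) -> float:
    """Percent change in average FPS from the baseline run to the test run."""
    base_avg = mean(baseline_fps)
    return (mean(test_fps) - base_avg) / base_avg * 100.0


def is_meaningful(change_pct: float, run_noise_pct: float) -> bool:
    """Treat a change as real only if it exceeds the run-to-run noise."""
    return abs(change_pct) > run_noise_pct
```

For example, if two manual runs on the same settings differ by a few percent, a Crossfire-on result within that same few percent should be read as "no change".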

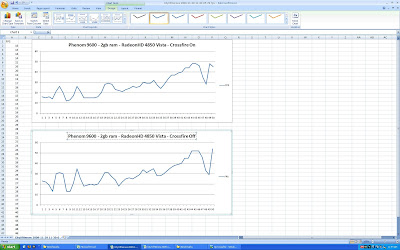

First computer up on the blocks is a Phenom 9600 with Crossfired 4850's running Windows Xp.

I did two runs, one with Crossfire turned off

And one with Crossfire turned on

And then the results:

Scaling was pretty flat through the run. Crossfire support had no impact at all on the frame count.

***

I swapped to Windows 7 on the same hardware

And while I remembered to take a picture at the start of the Crossfire run, I forgot to take one for the Crossfire turned off run.

The results were fairly predictable with Crossfire having no real impact on the game.

***

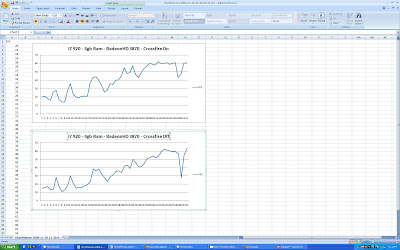

Next up was another Crossfire system mixing a bit of old with a bit of new: my older RadeonHD 3870s paired with an i7 920.

And the Crossfire mode setting on

and off:

And the results...

This is where things start to get really interesting on the scaling of CoH's performance. Now, I know, and even HardOCP backs this up, that the 4850 is more powerful than the 3870x2.

Okay, yes, the 3870s I have were backed up by an i7 920 with a higher clock speed than the Phenom and more memory... but City of Heroes is a 32-bit application. Handing it more than 2 GB of memory pretty much does nothing, and the scaling was pretty much identical across Windows 7 and XP... so it's not like the copy running on the 4850s was starved for memory.

The answer is actually one of my concerns for Going Rogue. The theoretical texel fill rate of the 3870 is 12,400 MTexels/s, and the 4850's is 25,000 MTexels/s. However, the pixel fill rate on the 3870 is 12,400 MPixels/s, while the RadeonHD 4850 only manages 10,000 MPixels/s. From the quick benchmark I did, it seems that City of Heroes is bound to texture performance more than anything else.
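For anyone wondering where theoretical fill rates like these come from, they are simply core clock multiplied by the number of output or texture units. A minimal sketch: the clock speeds and unit counts below are reference-card values I am assuming (they are not stated in the post), chosen because they reproduce the figures quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    core_mhz: int  # core clock, assumed reference value
    rops: int      # render output units, assumed
    tmus: int      # texture mapping units, assumed

    @property
    def pixel_fill_mpixels(self) -> int:
        """Theoretical pixel fill rate in MPixels/s."""
        return self.core_mhz * self.rops

    @property
    def texel_fill_mtexels(self) -> int:
        """Theoretical texel fill rate in MTexels/s."""
        return self.core_mhz * self.tmus


HD3870 = GpuSpec("RadeonHD 3870", core_mhz=775, rops=16, tmus=16)
HD4850 = GpuSpec("RadeonHD 4850", core_mhz=625, rops=16, tmus=40)

for gpu in (HD3870, HD4850):
    print(gpu.name, gpu.pixel_fill_mpixels, "MPixels/s,",
          gpu.texel_fill_mtexels, "MTexels/s")
```

These are paper numbers only; they say what a card could push in the ideal case, not what any given game engine will get out of it.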

Okay, the RadeonHD 57xx series starts at a theoretical rate over 16,000 MPixels/s, so it should be faster in the current game engine, but there's still the feeling that a modern graphics card is just spinning itself over, unable to bring its full power to bear. If Going Rogue is more shader-dependent than texture-dependent, we should actually see framerates skyrocket on certain graphics cards.

***

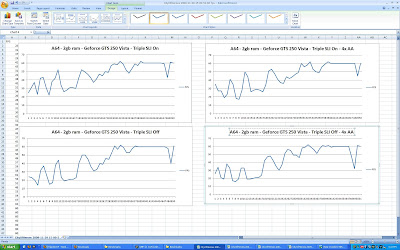

Now, over to Nvidia for the Triple SLI GTS 250 system paired with an Athlon64 6000. This system is hooked up to a smaller LCD panel, a 1680x1050 screen, so the benchmark results aren't directly comparable to the results on the previous systems.

Since I was running in a lower resolution compared to the other systems, and I was running on Nvidia cards, I did a total of 4 runs. Two with Anti-Aliasing enabled in the game, and two with Anti-Aliasing turned off.

GTS 250 SLI On

Now, before I go further, there is an interesting note here that will probably halt several players from purchasing Nvidia cards for a specific feature.

Note that although I'm using a Platform with Nvidia PhysX support... City of Heroes does not recognize PhysX On Nvidia Cards.

So, when I turned SLI off, I made sure to leave PhysX on... just to see what would happen.

GTS 250 SLI Off

GTS 250 SLI On 4x AA

GTS 250 SLI Off 4x AA

Then the results

Once again we see the same thing as with the Radeon cards. The GTS 250 was spinning its wheels on the 11,000 MPixel theoretical fill rate. Anti-Aliasing did little to change the overall performance through that section of City of Heroes at that resolution. Which is actually why I put the GTS 250s on a lower resolution screen, since A: RadeonHD 4850 Crossfire puts a dent in Geforce GTX 260 SLI, and B: most of the games I play don't optimize for multiple GPU cores.

***

So, a couple things to take away from even this short test.

Multiple GPUs don't do a whole lot of good in City of Heroes right now.

High-end GPUs don't do a whole lot of good either. They simply can't put their power down in what looks to be a texture-reliant engine.

Buying Nvidia for PhysX support won't do you a lot of good. You'll actually need a PhysX PPU card, and they are no longer sold new. They are not that expensive used though.

I think the lack of Nvidia PhysX support might actually be an indication that the developers are spending time converting / upgrading the engine to use OpenCL instead.