-

Posts

4197 -

Joined

-

Quote: I don't know. "Ask Jeeves" a murder mystery movie involving a clueless detective and the internet.

As long as Steve Martin stays FAR away from it.

-

Quote: If a mind/dom can finish an LSF solo, can he do the same with the STF?

On paper... no.

The big problem is Lord Recluse and the towers which super-buff him. From Paragonwiki: http://paragonwiki.com/wiki/STF

Quote: Red: Status Effect Bonus (Knockback, Disorient), +105% DMG (Smashing, Lethal, Energy), +105% Res (Smashing, Lethal, Energy) (hardcapped at 100%)

Orange: +200% DEF (All) (hardcapped at 175%), +17.7 Protection (Hold, Immobilize, Disorient)

Green: Healing, +MaxHP (hardcapped at 150% or 46015.73 hp), +2000% Regen

Blue: +30% ToHit, +1000% Recharge (hardcapped at 400%), +200% Run Speed

Basically, with these buffs, on top of Lord Recluse's status as a Level 54 Archvillain, soloing is numerically impossible. For starters, Lord Recluse's base To-Hit, on top of the To-Hit advantage that comes from just being an Archvillain, never mind a level 54 Archvillain, means he will hit through defenses that approach 2x the soft-cap: e.g. he has hit through 90% defense.

Also, his damage is super-buffed, and even if you have capped 90% resists on a tank, he'll still hit like a freight train.

On paper the only mez effect that might work is sleep, since it's not covered by the Purple Triangles. As Khalan brings up, there is a question of whether you can do anything with a build that can perma-sleep a level 54 archvillain.

In the LRSF you can confuse a level 53 Hero into attacking the other enemies. In the STF, Lord Recluse will only spawn when the 4 Patron Archvillains are down. Neither the Flier, the Flier's spawned pets, nor Lord Recluse's summoned pets can actually do any meaningful damage to Lord Recluse with the towers up, and it's questionable whether they could touch him with the towers down.

It's also unlikely, although I've never tried it, that you could direct confused summons from Recluse, confused summons from the Flier, or the Flier itself to all focus on a single tower to begin with.

Without at least the damage tower down, there's no way any archetype can really sustain a fight against Lord Recluse.

-

Quote: I will very shortly have a brand new computer with an ATI Radeon HD 5850 video card. I would like to use a dual monitor set up, with one monitor for gaming, and another for web browsing / watching video. What 20" to 22" monitor would you recommend for such a set up?

Any monitor "should do".

Personally I would point you in the direction of the Acer brand right now.

Quote: Awesome.... so all we have to look forward to is more proliferation because anything else would mean work. How boring.

Lillika: That's... that's actually... Okay. The only way I'm going to counter this thought, since I can actually see a large number of players believing it, is to do what I didn't want to do and spell the problem out.

One of the factoids buried somewhere in the (I think completely gone) Issue 17 closed beta forums was Castle's post on the amount of time it took to actually do the whip set. According to Castle, the time it took to animate just one whip attack, one single attack, was equivalent to the time it would take to animate another 2 or 3 power sets. At 9 powers per set with no reused animations, that is 18 to 27 completely new power animations.

The developer Synapse later verified that the task of animating whips as used by demon summoners was best described as monumental. Castle again commented on the difference in a thread in the public forums: http://boards.cityofheroes.com/showp...&postcount=148

Quote: It's (obviously) not impossible, but it has been EXTREMELY time consuming getting it to work. I believe the Dual Pistols animations took about 1/5th of the time that the Whip attacks have taken...and there were more animations required in that set!

The question is not whether there will be no new unique power sets because they take work. The question is whether there will be no new WHIP powers, because they take far more work than other powers. Even then, Castle did not say never. It is likely that animating and completing a single whip power, now, would take as much work as a single nine-tier powerset. That's still a large amount of time to spend on a single power when other new sets could be worked on instead.

Now I can't find the direct post, only references to it, so I think it was in a Closed Beta section as well, but the developers have been asked if there was anything more difficult than whips. I want to say David / Noble Savage responded with something to the effect of yes, there is one power-set design off the top of his head that was going to be more difficult and time-consuming than whips: Two-Handed Staffs / Lances -

http://boards.cityofheroes.com/showp...2&postcount=13

Quote:Unlikely. The 3 attacks that we have now for MM's took up far too much time in terms of development and, while we could likely reduce that somewhat now that we know what we are doing, it would also require 3-4 more animations to be made.

So, while I'm not saying "Never" the odds are against it. -

Quote:Don't forget: http://wiki.cohtitan.com/wiki/Hit_Points

-

Quote: Looking for a little advice. I have been reading around the forums and working with MIDs to try out all sorts of different ATs. I like scrappers for their solo-friendly playstyle, and the focus always seems to be to cap s/l def.

First, there are two types of defense. There is Positional, which is based on how an attack is delivered: ranged, melee, and AOE. Then there is Typed, which is based on the kind of damage the attack does: Smashing, Lethal, Fire, Cold, Energy, Negative Energy, and Psionic.

Second: A soft-capped defense is one that has reached a value of 45% defense or greater.
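To show why 45% is the number everybody chases, here is a minimal sketch of the to-hit arithmetic. It assumes an even-level enemy with a 50% base to-hit and a hit chance clamped between 5% and 95%; those three figures are assumptions on my part, and the sketch ignores accuracy multipliers plus level and rank modifiers. The function name is made up for illustration.

```python
def hit_chance(base_to_hit, defense, to_hit_buff=0, floor=5, ceiling=95):
    """Simplified chance (in percent) for an enemy attack to land.

    All arguments are percentage points, e.g. 45 means 45% defense.
    Assumed, not taken from the game code: 50% base to-hit for an
    even-level enemy, a 5% floor and a 95% ceiling.
    """
    raw = base_to_hit + to_hit_buff - defense
    return max(floor, min(ceiling, raw))


# No defense at all: the enemy lands half of its attacks.
print(hit_chance(50, 0))
# 45% defense pushes the enemy down to the assumed floor.
print(hit_chance(50, 45))
# Extra defense past that point buys nothing against an unbuffed enemy.
print(hit_chance(50, 60))
# An enemy carrying a +30% to-hit buff eats straight through the soft-cap.
print(hit_chance(50, 45, to_hit_buff=30))
```

The last call is the reason defense beyond 45% still matters against enemies that carry to-hit buffs.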

The focus on Smash / Lethal defense is for two reasons. The first reason is that Smash / Lethal attacks are the most common types of attacks used by enemies. I think the hard number is that somewhere around 70% of all in-game NPC attacks have a Smashing or Lethal component.

The second reason is that most melee defense sets have components to protect against Smashing / Lethal damage. It is easier to get these sets to the 45% defense soft-cap since you'll have to use far fewer IO sets than on a build that is starting from 0% defense.

Quote: I look at the builds for tanks and see that aoe/ranged/melee is capped but s/l is not (in the ones I looked at - shield def/ele).

Only one Tank Set can soft-cap to Typed Defense on its own without IO boosts: Ice Armor.

All other Melee defense sets cannot achieve a 45% soft-cap on their native sustainable powers. All other melee sets need external buffs, either from team-mates, or from IO's, in order to reach the 45% defense soft-cap.

Quote: Can someone explain how the different attributes work and why scrappers would cap s/l but tanks would not?

Thanks.

Very few sets with defense components have Positional defense. Positional Defense is more valuable than Typed Defense since it only matters how the attack was delivered.

For example: a scrapper, brute, stalker, and tank fight in melee range, and most attacks launched at them will be melee. If they have a 45% or greater melee defense, a greater number of attacks will just never land.

A Blaster, Corruptor, or Defender normally fights from range. If they have 45% or greater ranged defense, nearly every attack thrown their way will miss.

Because positional defense is more valuable, more of the high-end builds focus on driving positional values as defense solutions.

Quote: Seeing as how a certain contact in Going Rogue doesn't exist in the gameworld and can only be contacted remotely, that technology can be used with adding Calvin Scott's TF to Ouroboros, right?

Devs said the old Calvin Scott would be making its way to Ouroboros eventually. No date has been set on when it will be added.

I suspect that Oneirohero is on the right track... we'll probably see the old Calvin Scott make it into Ouroboros at the same time we get a "mirrored" event in Praetoria.

Quote: Ever notice the cars driving in the streets are from like 40 years ago?

does COH take place in the 1970s?

I'm not sure if this is a serious question or a not so serious question... so forgive me if this is a joke and I missed it.

There are two primary reasons the cars in CoH look old.

The first one was networking performance. You can kind of see the "network data transmission is everything" mantra in other games from the time, such as Dark Age of Camelot or Planetside. The visual styles of MMO's from the 2002-2004 release cycle largely center on large static worlds with very few, if any, in-game objects that move and react to the players. The idea was to cut down on the amount of information that needed to be fed back and forth between the server and the player. Both player interactions with cars and the cars themselves were limited to save on network bandwidth.

The second reason is a little bit more legal. From the plethora of car games out there, you might not know that many car manufacturers tend to look negatively upon images of their cars being reproduced without their permission. CoH did not contain look-alikes of "modern" cars so they wouldn't have to pay licensing fees.

Okay, that was 6 years ago.

Today? I really don't know why the cars haven't been updated or given a revamp. My guess is that on the list of art assets to be looked at, replacing the cars has been a distinct last place feature. I don't know if Noble Savage and crew have plans to update the cars with their own designs or not now that Going Rogue is out the door. -

Quote: The geom.pigg problem is an old one. It used to create a "debug assertion failed" error. Now, it just puts you into a looping hell.

The solution is the same as it has always been, as per http://downloads.transgaming.com/fil...easenotes.html

On edit, a couple of notes:

While you're changing the middle number, you'll want to use the first and third numbers you see from your OWN cat command, reinserting them with the echo command.

When you shut your machine down, the new number you enter will be lost, and you'll have to go through this process again if you need or wish to download in the future. However, the numbers your machine assigns to the tcp_wmem file come from a *different* file, which you can edit with the updated TCP send window size. I'm afraid I don't remember which file that is, off the top of my head, but it's available via websearch. ^.^;

W4E

+rep

I feel ashamed that I didn't already know this fix.
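For anybody following the quoted fix, here is a rough sketch of the "keep your own first and third numbers, swap the middle one" step. It only builds the line you would echo back; it does not touch the system. The release notes link is truncated, so the new middle value below is a placeholder, and the sample "cat" output is an illustrative value rather than what your machine will show. On Linux the live values sit in /proc/sys/net/ipv4/tcp_wmem; as far as I know, the setting that survives a reboot is the net.ipv4.tcp_wmem key in /etc/sysctl.conf on most distributions, but check your own distribution's documentation.

```python
def build_wmem_line(current, new_default):
    """Return the three-number line to echo into tcp_wmem.

    `current` is the text printed by `cat /proc/sys/net/ipv4/tcp_wmem`
    (minimum, default, maximum). Only the middle number is replaced;
    the first and third are kept exactly as your own machine reported them.
    """
    fields = current.split()
    if len(fields) != 3:
        raise ValueError("expected three numbers, got: %r" % current)
    minimum, _old_default, maximum = fields
    return "%s %d %s" % (minimum, new_default, maximum)


# Sample cat output (illustrative) and a placeholder new middle value.
sample_cat_output = "4096\t16384\t4194304\n"
placeholder_new_default = 65536  # hypothetical; use the number from the release notes

print(build_wmem_line(sample_cat_output, placeholder_new_default))
```

The printed line is what would go inside the echo command, run with root privileges.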

Hera: I have to disagree with you categorically, on every single point. Kinetic Melee has been judged by me to be frigging awesome for tanks.

Coupled with the new gauntlet target resistance debuff, Kinetic Melee on tanks delivers a powerful single target punch, a benefit that extends to Energy Melee. Kinetic Melee also features decent mitigation through knockdown, as well as excellent mitigation through the native damage debuff. Resistance based armors, such as Fire, Dark, and Electrical Armors, benefit the most from Kinetic Melee as the damage debuff component has the equivalent effect of increasing resistance values.

The damage debuff doesn't work as well for the entirely defense based Ice Armor set, since Ice Armor doesn't get any meaningful resistance values to begin with. Knocking 7% damage off an attack isn't going to mean much in the long run. Nor does the kinetic melee set really work with Stone Armor, but that's more down to the speed penalty in using granite, and the set's basis as a defensive set outside of granite.

Mixed resist / defense tanks, such as Shield, Invuln, and Willpower, don't see as great a benefit either from the set's damage debuff components, although that is more due to these sets generally being the strongest against SO and light IO usage-builds.

One of the perception problems with Kinetic Melee is that it is a slow activating set. It's got a long-wind up and then a sudden quick slam for most of the animations. Players who get hung up on the visual presentation, and can't separate the mechanics and timings of the power from the visual presentation, probably will find Kinetic Melee to be more annoying than Energy Melee's animations.

Should somebody who's making a tank for the first time check out Kinetic Melee?

Oh yes. It's a good solid choice for non defense-based tanks, again largely due to the damage debuff, and works well with SO's.

High-usage IO builds probably want to focus on driving recharge times and accuracy, since Power Siphon is crucial to pulling the most out of the set's damage capabilities.

Quote: Surely you could just roll up a new Praetorian and straightaway go to the options menu, and check out the colour values there and make a note of them?

I'd do it myself and post the numbers, but my own PC's off-limits right now, hence me even being here...

Again. No.

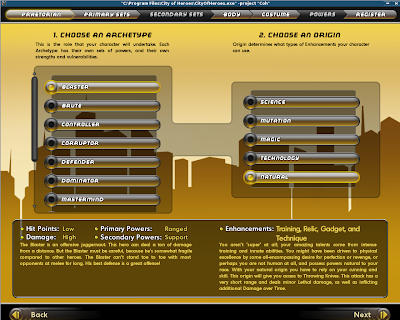

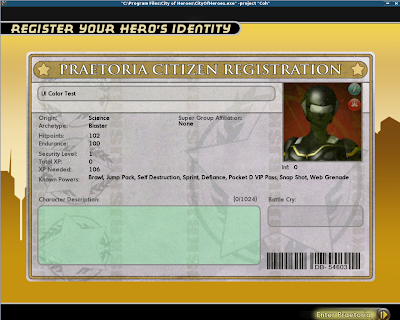

This is the color scheme the UI switches to when making a Praetorian Character.

This is the resulting User Interface Color scheme:

This interface color has been the default for... well... since I first started taking screenshots against Praetoria working on Cedega Maudite engines:

Whenever you back out of the Praetorian character creation system and create a Hero or a Villain, the default color scheme for each creation changes automatically.

Hero Default

Hero Default UI

Villain Default

Villain Default UI

Elements of each Side's main color theme are still present in some windows, such as the character identify window:

-

Quote: It's a LCD monitor by Gateway (FPD2275W-LCD-TFT-22" widescreen-1680x1050)....

I have played on and off for several years now and have never had a problem. Maybe it's time for a new video card?

Thanks again for your time!

Well. I'd be pointing you to a new video card if you want to run Ultra-Mode.

If you aren't interested in Ultra-Mode, a Geforce 7 isn't in any danger of being retired from driver support for probably another year or so.

My gut reaction is that this is an over-scan problem. The system, or the monitor, is sending or reading a signal from your computer and is trying to scale.

If you don't mind continuing to troubleshoot this particular problem, this Gateway monitor is listed as having both DVI and VGA cable inputs. If you are able to swap cables, this would help narrow down the potential problem.

If you are running through a VGA cable now, swap to a DVI cable. See if the enlarged screen continues.

If you are running through a DVI cable now, swap to a VGA cable. Check for the enlarged screen.

If you are getting the enlarged screen on one input, but not the other, there's the problem.

If you get it on both inputs, the next step is to try a different graphics card, or a different monitor. -

Try looking under Technical Issues & Bugs in the Development Forum: http://boards.cityofheroes.com/forumdisplay.php?f=578

Read the stickies there too

http://boards.cityofheroes.com/showthread.php?t=219502

http://boards.cityofheroes.com/showthread.php?t=231628 -

Quote: Hi,

Not talking about a costume part, but about the golden user-interface color...

Thanks anyway.

That would be the default user-interface color for creating a Praetorian. You will only get that color scheme if you are creating a Praetorian.

If you create a Hero or a Villain the user interface will swap to their native Blue and Red palettes.

-

When you first start up City of Heroes, there's a little box on the License Agreement labeled SAFE MODE:

Put a check in that box the next time you start the game up.

This will force the game into its lowest resolution mode.

From there you should be able to log in, go to your options, and set the same resolution for the game that you have for your monitor. -

Quote: Well that is lame, so I'd be better off just buying a better graphics card then getting two of the same?

I wouldn't go... that far yet.

Television has been playing around with Multi-GPU rendering modes on the backend of the game. There already have been some substantial visual performance gains from the SLI test back during I17 Beta. E.G. I was able to run medium ultra-mode settings on SLI'd GTS 250's.

Warning - Tangent: You can still force AFR SLI now by using tools like nHancer and modifying hex values. This approach isn't really recommended. Even though it's not as hazardous as messing with .dll's directly, messing with the hex values can cause some nasty damage to your system. I don't mean damage like memory corruption resulting in having to reload stored data. I mean physical damage, such as one GPU doing too much work and not obeying a load balancer. It is possible when mucking about with the functions of multi-gpu rigs to send one GPU's usage sky-high, giving it too much work and burning it out. It's also possible to electrically burn out the SLI bridge.

Getting back to the original question: is it better to go single gpu now than buy with multi-gpu in mind? I don't know how to answer that:

Just take a moment and browse through some of the posts here on these forums, and those on the Starcraft II forums. You have people out there who honestly believe that the software application can drive a GPU into an overheat mode. Yes, badly written software can overheat a GPU. Case in point, Nvidia's software driver that disabled all thermal controls.

For the most part, overheating is not a software problem. It is a hardware problem. All external graphics cards are built to run at specified clock speeds, and each is theoretically built to exhaust a certain amount of heat. If your graphics card is, gasp, overheating: either you don't have enough case cooling; the graphics card wasn't built properly in the first place; or you've been messing with how the hardware works. Mucking about with the load-balancers in multi-gpu rigs and forcing different methods of pushing the graphics cards can result in permanent physical damage to the computer.

This is why Crossfire and SLI profiles are such big deals from Nvidia and ATI. If they put out a profile, you have a certain amount of trust that they've actually tried that profile out... and if any hardware damage results from using their profile... you have somebody's throat you can go wrap your hands around.

I don't know what the time-table looks like for AMD or Nvidia to deliver reliable multi-gpu support with this game. My suspicion is that we'll see AMD deliver support first, since, well, beyond being a graphics partner, they've been much more involved with OpenGL as of late.

I don't know what other games you play. Some benefit drastically from Multi-GPU support. Case in point, GTX 460 2X SLI. In games that can leverage multi-gpu setups, the GTX 460 can shoot-past the RadeonHD 5870 2X Crossfire.

There is no guarantee that any game you pick up and play is going to be capable of leveraging a multi-gpu rig, or that it will. If you want the most guaranteed performance, buy a single card.

Warning - Tangent: Going back to the 460. Yes, it does actually outrun 5870's in 2X multi-gpu modes on supported games. You would kind of hope that a mid-range part released 10 months after a competing high-end product would have that kind of performance equalization.

I mean, the RadeonHD 2900 and RadeonHD 3870 were separated by only something like a 6-month window. In May 2007 the 2900 launched at a $400 price-point. In November 2007 the $180 RadeonHD 3850 came along and promptly put the card that sold for over twice as much in a rubbish bin.

The RadeonHD 3870 and the RadeonHD 4850 were separated by a roughly 7-month window. The 3870 launched with a $220 MSRP in November 2007. In June 2008 the RadeonHD 4850 launched at an MSRP of $200 and had no problems doubling the performance.

The fact that the GTX 460, despite being a mid-range part on a fresh new chip spin from the fab, struggles to best the competitor's binned filler card from 5 months prior in single GPU tasks? That's worrying. Honestly, if I was an Nvidia fan, which I'm not, I'd be panicking given that the competitor has said they won't be doing a product refresh... they are just going to go straight to their next generation of chip design.

Quote: Well.. that figures. I don't see how it would break the game. They should just limit how can use the teleporter's.

Are you... seriously... wanting a response to that question? I mean, I can give one.

The Database Does Not Work Like That.

For starters, Supergroup and Villain-group bases are not exposed to the player in the same way that other maps are exposed. Bases are a hybrid of an instanced map, a zone map, and have other odd attributes, such as the exposure of controls to modify and manipulate the map.

In Zone maps, the map is always on. Even if nobody is in the zone, the map itself is still loaded, and events still occur inside the zone. Which is why events like Zombie attacks or Giant Monster assaults can occur in zones that don't have any players logged in.

In the base map, the map is not always on. The base map is loaded and given an instance identification whenever somebody accesses it. Now, this may have changed, but I'm pretty sure it hasn't.

Exposing base maps as instances allows the developers to save on real-time processing needs. If there is nobody in a base, there's no reason for the base to be loaded onto a server.
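A toy sketch of the difference, purely for illustration: zone maps are loaded once and stay loaded, while a base map only gets an instance while somebody is inside it. None of the class or method names below come from the actual game code; they are made up to show the idea.

```python
class MapServer:
    """Toy model: zones are always on, bases are loaded on demand."""

    def __init__(self, zone_names):
        # Zone maps are loaded up front and are never unloaded,
        # even when nobody is standing in them.
        self.zones = {name: set() for name in zone_names}
        # Base instances only exist while at least one player is inside.
        self.base_instances = {}
        self._next_instance_id = 1

    def enter_base(self, base_name, player):
        """Load the base on first access and return its instance id."""
        if base_name not in self.base_instances:
            self.base_instances[base_name] = {
                "id": self._next_instance_id,
                "players": set(),
            }
            self._next_instance_id += 1
        instance = self.base_instances[base_name]
        instance["players"].add(player)
        return instance["id"]

    def leave_base(self, base_name, player):
        """Remove the player; unload the base once it is empty."""
        instance = self.base_instances.get(base_name)
        if instance is None:
            return
        instance["players"].discard(player)
        if not instance["players"]:
            del self.base_instances[base_name]

    def loaded_maps(self):
        """Names of everything currently taking up server resources."""
        return sorted(self.zones) + sorted(self.base_instances)


server = MapServer(["Atlas Park"])
server.enter_base("Example SG Base", "alice")
print(server.loaded_maps())   # zone plus the occupied base
server.leave_base("Example SG Base", "alice")
print(server.loaded_maps())   # zone only; the empty base is gone
```

An always-on event, like a zone invasion, has somewhere to run in the first model and nowhere to run in the second unless a player happens to be inside.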

The hybrid nature of the SuperGroup / Villaingroup base is just one of the many obstacles that stand in the way of spawning them as a Tourist capable zone. There are many other legacy code issues, such as the status of destructible objects which are flagged to be friendly to Heroes / Vigilantes in SG bases, but would be valid targets for Villains and Rogues.

No, these are not insurmountable problems to address. They are, however, time-consuming problems, and it's only now with Going Rogue actually having launched that Paragon Studios might have the development resources to tackle the legacy database issues. Even then, updating Bases and everything that can be done with bases to recognize Faction differences is probably a good 12-month to 16-month project.

Quote: Perhaps they should at least fix the game so it's doesn't say that you've gotten a beacon when there is not one.

Thanks anyways.

A: wrong terminology.

B: This is actually related to the original problem: the database doesn't work like that, or more precisely, this is a limitation of how the database currently works.

The system largely works on an automated trigger, where certain actions automatically result in a certain event. This comes down to a code-simplification problem. As I understand how the system works; and if I'm wrong Tele will likely chew me out later; the system basically does a table look-up. The database system checks to see how many badges you have earned, then when you meet certain requirements in the table, an automated script is run that performs the on screen unlocks.

Determining whether or not you are eligible for those benefits to begin with would require an additional lookup. Your information and status have to be sent to the server for every action that you do, and a response has to be generated in relatively real-time. One additional look-up operation isn't a big deal. Now ask yourself this question: how many people play the game? How many different characters will be capable of generating the circumstances you created?

One single operation suddenly just became thousands of operations. Now we are talking not just one or two kilobytes of data to and from the network, we are looking at gigabytes of information to be taken in, stored, analyzed, and manipulated... for each player.

This additional character look-up would have to be added to every situation where a Tourist faction could gain a reward that they are not eligible for. Now the solution has gone from one or two lines of code to several lines of code for every single situation.

Instead, the developers' current solution, which basically consists of a read / write permissions check after the action has been accomplished, saves time and data, and guards against code bloat. The scripts and code don't have to be changed or modified, and the developers can bury the denial of reward on the back-end of the processing, which means that your direct gameplay does not suffer from the increased processing load.
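Here is a rough sketch of that flow as I understand it, and it is only an illustration: the table contents, field names, and function names are all hypothetical and are not taken from the game's code. The trigger fires off a plain table look-up, and the permission check only happens afterwards, when the reward is written.

```python
# Hypothetical table: badge count reached -> unlock announced on screen.
UNLOCK_TABLE = {10: "beacon_a", 25: "beacon_b"}


def triggered_unlock(badge_count):
    """Automated trigger: a plain table look-up, no eligibility check."""
    return UNLOCK_TABLE.get(badge_count)


def commit_unlock(character, unlock):
    """Back-end write check, run after the trigger has already fired."""
    if unlock is None or not character["may_write_base_rewards"]:
        return None
    character["rewards"].append(unlock)
    return unlock


def earn_badge(character):
    """Returns (what the player is told, what is actually stored)."""
    character["badges"] += 1
    announced = triggered_unlock(character["badges"])
    granted = commit_unlock(character, announced)
    return announced, granted


tourist = {"badges": 9, "may_write_base_rewards": False, "rewards": []}
print(earn_badge(tourist))   # announced on screen, but nothing is stored

member = {"badges": 9, "may_write_base_rewards": True, "rewards": []}
print(earn_badge(member))    # announced and stored
```

The tourist case is the "it says I got a beacon but there isn't one" situation: the message comes from the trigger, and the denial comes from the write check behind it.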

The thing is, you use the wrong terminology. There's nothing for the developers to fix. The code itself is not broken. Your expectation of how the code should work is what is broken.

The developers don't need to fix anything with the code. What would be nice is if the developers were able to either update the code, or rewrite the code, and the resulting database infrastructure. -

Quote: COUGH. Wrong link JS.

Those are for the professional gfx cards.

Nope. As usual, you would be completely wrong. First: AMD's driver program is a unified driver. They use the same binary driver for both OpenGL and DirectX API support. If you check the AMD support site, you'll find the binary signature on the drivers to be the same.

Second: AMD has been working on the OpenGL driver for a while now, and the FirePro driver beta is where the OpenGL driver changes are going to show up first.

http://www.overclock.net/ati-drivers...ta-8-76-a.html

Do us a favor TCS, stay out of the Tech Issue forum.

* * *

For the benefit of people coming along and reading this later, there is a good reason why AMD doesn't certify every binary release of Catalyst as a Workstation Driver. Although they share the same source code, as well as the same binary code, the actual processing on the cards works a bit differently. For background data on the differences several sites, such as Anandtech, address the differences between the types of rendering: http://www.anandtech.com/show/1575

Most commercial games leverage the graphics card for Fast-Pass rendering. What this means is that the graphics card does as much work as possible in one cycle while getting the image as close to the desired result as possible. In fast-pass rendering methods developers often settle for less than perfect rendering results, such as aliasing (jagged edges), or rough textures (no anisotropic filtering).

Most commercial rendering packages, such as Maya or Autocad, leverage the graphics card for Accurate-rendering. What this means is that the program doesn't care about managing a certain number of frames per second. The program cares about making the rendered image as accurate as possible, regardless of the amount of time it takes to actually render the scene. Which is why whenever a new Pixar or Dreamworks CGI movie comes out you'll hear about the hundreds of thousands of rendering hours that went into the creation of the content.

In the past Workstation Graphics cards used to be built physically different from Desktop Graphics cards. Names like Matrox, 3Dlabs, and SGI ruled the Workstation market.

Starting back with... I want to say ATi on the R200W (Radeon 8500), and Nvidia on the NV10 (original Geforce), these two vendors have largely re-wrapped their desktop chips with higher memory counts, larger bus-widths, and put them on the market as professional graphics cards. A few years back, both vendors started to unify their driver teams, using the same source code for the same binary driver release, and yes, at one point the FireGL driver was separate from the fast-pass performance driver for both cards. Since at least... I want to say 2004 but I could be wrong... ATi has been shipping the performance driver and the workstation driver in the same FireGL / Radeon X package.

Which is where we get back to the FireGL driver certification.

Users who buy Workstation Cards do so for two reasons: guaranteed performance :: guaranteed support

Users buying professional workstation equipment expect a minimum level of performance that will always be delivered, and want somebody to throttle if that performance is not delivered. What AMD basically does is schedule their Certified Frglx release around, about every 3 months. They use this extra-time between releases to run a far more comprehensive battery of tests than those required to meet WHQL certification, and to make sure their driver is as stable as possible against a small number of rendering applications. That way they can turn around and tell the buyers of the professional cards that: "yes, your card will work with this driver in that application exactly as you expect it will."

Unfortunately, this support method in the past terrified ATi desktop consumers since ATi has been known to play around with OpenGL support between Professional Driver releases. I suspect this is what has happened with 10.7. Somebody was fiddling around with the OpenGL driver in preparation for the upcoming revamp in 10.8. It only "largely" affects CoH and Unigine since these are the only two commercially released applications that leverage OpenGL 3.x for fast-pass rendering.

This is where we get into the standard code rant, and where TCS just annoys the living daylights out of me. The OpenGL driver is the OpenGL driver. Changes to one part of the driver, no matter how well intentioned, can have effects in other parts of the driver. So while somebody on the coding side could make a change that they think will improve accurate-pass rendering performance by 10%, they could have accidentally sent fast-pass rendering performance into the gutter.

Anybody who's been playing City of Heroes for a lengthy amount of time can tell you the number of times the developers have fixed one feature, only to have a completely different feature that should in no way be affected... break. (*cough, STF TOWERS, cough*)

Now, should you be running the Workstation Driver betas anyways? Probably not. This year has seen AMD trying a semi-new policy of getting preview and beta release drivers into consumers' hands before the official product actually launches. E.G. the public 10.4 beta, the OpenGL 4.0 preview driver, the 10.7 OpenGL 2.0 ES certified driver, and now the OpenGL revamp driver.

Hopefully this explains to anybody who was curious why AMD (and Nvidia for that matter) retain different driver releases for their workstation and consumer graphics cards, despite those drivers sharing the same source code and binary formats. -

Quote: Yep, looks like that's the issue. I benchmarked in Unigine, and my average FPS was 51 for DX11 versus 17.5 for OpenGL. Guess I'll just need to wait for ATI to release an update. Thanks for the help, folks.

If you know where to look, you can find the 10.8 Beta drivers.

-

Quote: Oh and thinking about it, UNiGiNE benchmark programs support both Dx and OpenGL.

I prefer Unigine since it's "written" like a real game... which it would be because it is a real game engine.

With 10.7 I have a huge slowdown in OpenGL on my own RadeonHD 4850's when compared to the DX10 renderer. I don't get that slow down on the 5770's I have on hand. On those, the DX10 and OpenGL renderers post pretty much the same scores at the same resolutions. -

No.

Maybe I should make this a little bit clearer. Modifying components of atiogl can, and more than likely will, result in a hard kernel crash and loss of data. Now if you happen to LIKE reinstalling Windows, by all means, feel free to try and edit the atiogl.xml or the atiogl.dll files. -

Quote: I had this same problem with my Nvidia 8800 GTX. I rolled back the drivers to 257 instead of 258 and that seems to have fixed it.

Remember: if you are able to isolate the problem to a driver update, report the bug to Nvidia: http://www.nvidia.com/object/driverq...assurance.html